Keeping up with an industry as fast-moving as AI is a challenge. So until an AI can do it for you, here’s a quick recap of recent stories in the world of machine learning, along with notable research and experiments that we didn’t cover on their own.

This week in AI, Google paused its AI chatbot Gemini’s ability to generate images of people after some users complained about historical inaccuracies. For example, when asked to depict a “Roman legion,” Gemini would show an anachronistic, cartoonish group of racially diverse infantrymen, while rendering “Zulu warriors” as black.

Google, like other AI vendors including OpenAI, appears to have implemented clumsy hard-coding under the hood to “fix” bias in its models. In response to prompts such as “Show me an image of only women” or “Show me an image of only men,” Gemini would refuse, asserting that such images “can lead to exclusion and marginalization of the other gender.” Gemini was also reluctant to generate images of people identified solely by race, such as “white” or “black,” ostensibly out of concern for “reducing individuals to their physical characteristics.”

The right has latched onto the bug as evidence of a “woke” agenda being perpetuated by the tech elite. But you don’t need Occam’s razor to see the less sinister truth: Google, which has come under fire in the past for bias in its tools (see: classifying black people as gorillas, mistaking a thermal gun in a black man’s hand for a weapon, etc.), is so desperate to avoid history repeating itself that its image-generating models depict a less biased world, however erroneous.

In her best-selling book “White Fragility,” anti-racist educator Robin DiAngelo writes about how the erasure of race, or in other words “color blindness,” contributes to systemic racial power imbalances. By claiming to be “color blind,” or by reinforcing the idea that simply acknowledging the struggles of people of other races is enough to label oneself “woke,” people perpetuate harm by avoiding any substantive conversation on the topic, DiAngelo says.

Google’s ginger treatment of race-based prompts in Gemini didn’t avoid the problem, per se, but it disingenuously tried to hide the model’s worst biases. One could argue (and many have) that these biases should not be ignored or glossed over, but rather addressed in the broader context of the training data from which they arise: society on the World Wide Web.

Yes, the data sets used to train image generators typically include more white people than black people, and yes, the images of black people in those data sets reinforce negative stereotypes. That’s why image generators sexualize certain women of color, depict white men in positions of authority and generally favor wealthy Western perspectives.

Some may argue that AI vendors can’t win. Whether they choose to address their models’ biases or not, they will be criticized. That’s true. But I would argue that, either way, these models are lacking in explanation: they are packaged in a way that minimizes how their biases manifest.

If AI vendors addressed the shortcomings of their models head-on, in humble and transparent terms, it would go much further than haphazard attempts to “fix” what is essentially unfixable bias. The truth is, we all have biases, and we don’t treat people the same as a result. Neither do the models we’re building. And we would do well to admit that.

Here are some other notable AI stories from the past few days.

- Women in AI: TechCrunch has launched a series highlighting notable women in AI. Read the list here.

- Stable Diffusion v3: Stability AI announced Stable Diffusion 3, the latest and most powerful version of its image generation AI model, based on a new architecture.

- Chrome gets GenAI: Chrome’s new Gemini-powered tools allow users to rewrite existing text on the web or generate something entirely new.

- Blacker than ChatGPT: Creative advertising agency McKinney developed the quiz game ‘Are You Blacker than ChatGPT?’ to highlight bias in AI.

- Calls for laws: Hundreds of AI luminaries signed an open letter earlier this week calling for anti-deepfake legislation in the U.S.

- Matching by AI: OpenAI has won a new customer in Match Group, owner of apps like Hinge, Tinder and Match, whose employees will use OpenAI’s AI technology to perform work-related tasks.

- DeepMind Safety: DeepMind, Google’s AI research division, has created a new organization called AI Safety and Alignment. The organization is comprised of existing teams working on AI safety, but has also expanded to include a new specialized group of GenAI researchers and engineers.

- Open models: Just a week after launching the latest iteration of its Gemini models, Google has released Gemma, a new family of lightweight open-weight models.

- House task force: The U.S. House of Representatives has created a task force on AI that, as Devin writes, feels like a punt after years of indecision that show no sign of ending.

More machine learning

AI models seem to know a lot, but what do they actually know? Well, the answer is nothing. But if you phrase the question a little differently… they do seem to have internalized some “meanings” similar to those known to humans. No AI truly understands what a cat or a dog is, but could the embeddings of those two words encode a sense of similarity that differs from that of, say, cat and bottle? Amazon researchers believe so.

Their study compared the “trajectories” of similar but distinct sentences, such as “The dog barked at the thief” and “The thief made the dog bark,” with those of grammatically similar but different sentences, such as “The cat sleeps all day” and “The girls have been jogging all afternoon.” They found that the sentences humans perceive as similar were indeed treated as more similar internally, despite being grammatically different, and vice versa for the grammatically similar ones. OK, I think this paragraph was a bit confusing, but suffice it to say that the meanings encoded in LLMs are not entirely naive and seem to be more robust and sophisticated than expected.
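To make the idea of “similarity encoded in embeddings” concrete, here is a minimal sketch of cosine similarity, a common way to compare embedding vectors. It is not the Amazon researchers’ trajectory method, and the three-dimensional vectors in `toy_embeddings` are made-up values chosen for illustration, not output from any real model.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Hypothetical toy vectors; real embeddings have hundreds or thousands of dimensions.
toy_embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "bottle": [0.1, 0.2, 0.9],
}

cat_dog = cosine_similarity(toy_embeddings["cat"], toy_embeddings["dog"])
cat_bottle = cosine_similarity(toy_embeddings["cat"], toy_embeddings["bottle"])

# With these made-up values, "cat" sits closer to "dog" than to "bottle".
print(f"cat vs. dog:    {cat_dog:.3f}")
print(f"cat vs. bottle: {cat_bottle:.3f}")
```

A score near 1 means two vectors point in nearly the same direction, while a score near 0 means they are close to unrelated.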

Neural encoding is proving useful for prosthetic vision, Swiss researchers at EPFL have found. Retinal prostheses and other methods of replacing parts of the human visual system generally have very limited resolution due to the limitations of microelectrode arrays. So no matter how detailed the incoming image is, it must be transmitted at very low fidelity. But there are many different ways to downsample, and this team found that machine learning does a great job of it.

Image credits: EPFL

“We found that applying a learning-based approach yielded improved results in terms of optimized sensory encoding. But what was even more surprising was that when we used an unconstrained neural network, it learned to mimic aspects of retinal processing on its own,” Diego Ghezzi said in a news release. It basically performs perceptual compression. They tested it on mouse retinas, so it’s not just a theory.
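For readers unfamiliar with downsampling, the sketch below shows one of the simplest fixed approaches, block averaging, which is the kind of hand-designed baseline a learned encoder would be compared against. It is an illustrative assumption, not the EPFL team’s method. The function name `average_pool` and the sample image are hypothetical.

```python
def average_pool(image, block):
    """Downsample a 2D grayscale image by averaging non-overlapping block x block tiles."""
    height = len(image)
    width = len(image[0])
    if height % block or width % block:
        raise ValueError("image dimensions must be divisible by the block size")

    pooled = []
    for top in range(0, height, block):
        row = []
        for left in range(0, width, block):
            # Collect every pixel in the current tile and replace the tile with its mean.
            tile = [
                image[y][x]
                for y in range(top, top + block)
                for x in range(left, left + block)
            ]
            row.append(sum(tile) / len(tile))
        pooled.append(row)
    return pooled


# A made-up 4x4 image reduced to 2x2, as if only four electrodes were available.
sample_image = [
    [0, 0, 4, 4],
    [0, 0, 4, 4],
    [8, 8, 2, 2],
    [8, 8, 2, 6],
]
low_res = average_pool(sample_image, 2)
print(low_res)  # [[0.0, 4.0], [8.0, 3.0]]
```

Block averaging treats every pixel in a tile as equally important. A learned approach can instead decide what detail to keep.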

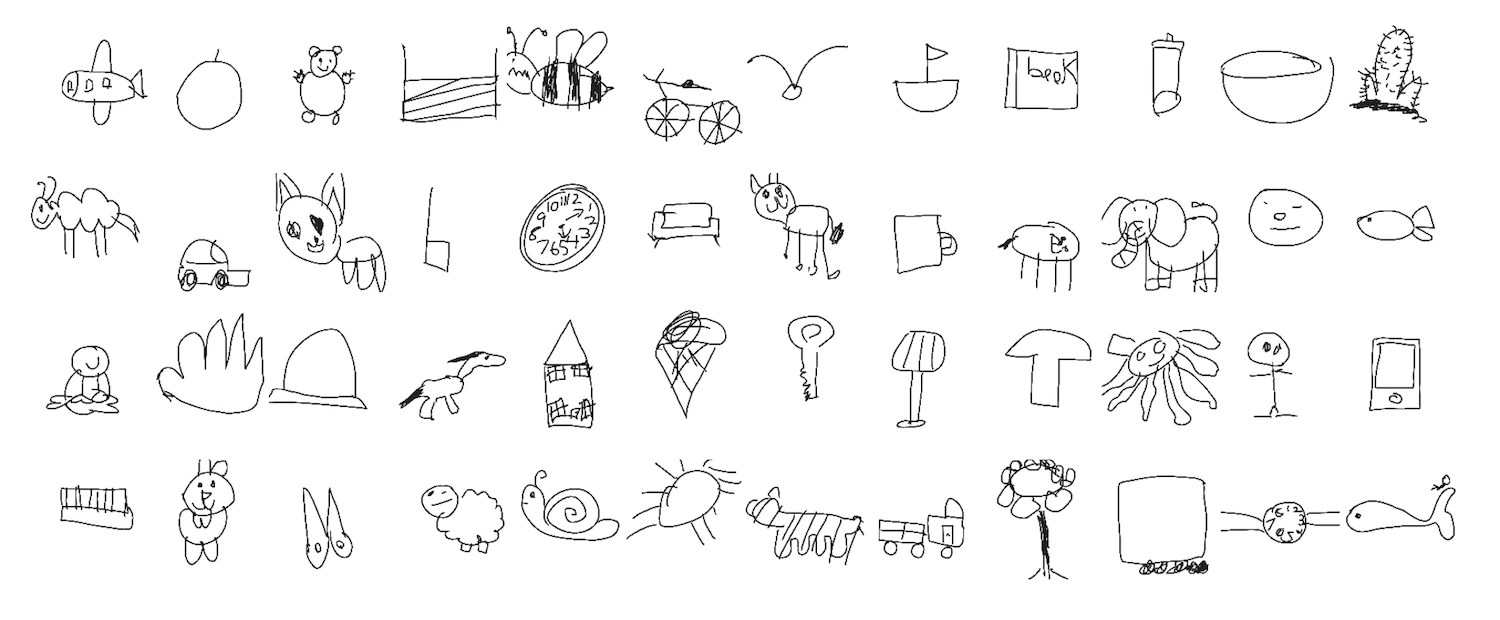

An interesting application of computer vision by researchers at Stanford University hints at a mystery in how children develop drawing skills. The research team collected and analyzed 37,000 drawings of different objects and animals made by children, and also assessed how recognizable each drawing was (based on children’s responses). Interestingly, it wasn’t just the inclusion of distinctive elements such as bunny ears that made the drawings more recognizable to other children.

“The kinds of features that lead drawings from older children to be recognizable don’t seem to be driven by just a single feature that all the older kids learn to include in their drawings. It’s something much more complex that these machine learning systems are picking up on,” said lead researcher Judith Fan.

Chemists (also at EPFL) found that LLMs can also be surprisingly adept at helping with their work after minimal training. It’s not just about doing chemistry directly, but about being fine-tuned on a body of work that no individual chemist could possibly know in full. For example, across thousands of papers there may be a few hundred statements about whether a high-entropy alloy is single-phase or multi-phase (you don’t need to know what this means; they do). The system (based on GPT-3) can be trained on this type of yes/no question and answer and quickly learns to extrapolate from it.

This is not a huge advance; it just adds to the evidence that LLMs are a useful tool in this sense. “Importantly, this is as easy as doing a literature search and is useful for many chemical problems,” said researcher Berend Smit. “Querying a foundational model may become a routine way to bootstrap a project.”

Last, a word of caution from Berkeley researchers, though now that I read the post again, I see that EPFL was involved in this one too. Go Lausanne! The researchers found that images found via Google were far more likely to enforce gender stereotypes about certain occupations or words than text that mentioned the same thing. And in both cases there were simply far more men present.

Not only that, but in an experiment, people who looked at images rather than reading text when researching a role associated that role with one gender more reliably, even days later. “This is not just about the frequency of gender bias online,” said researcher Douglas Guilbeault. “Part of the story here is that there’s something very compelling and very powerful about the representation of people through images that text doesn’t have.”

With things like the Google image generator diversity uproar going on, it’s easy to lose sight of the established and frequently verified fact that the data sources for many AI models exhibit significant bias, and that this bias has a real impact on people.