Robotics developer Figure made headlines on Wednesday by releasing a video demonstration of its first humanoid robot that engages in real-time conversations thanks to OpenAI’s generative AI.

“With OpenAI, Figure 01 can now have full conversations with people,” Figure said on Twitter, emphasizing the robot’s ability to instantly understand and react to human interactions.

The company explained that its recent partnership with OpenAI will bring high levels of visual and linguistic intelligence to its robots, enabling “high-speed, low-level dexterous robot movements.”

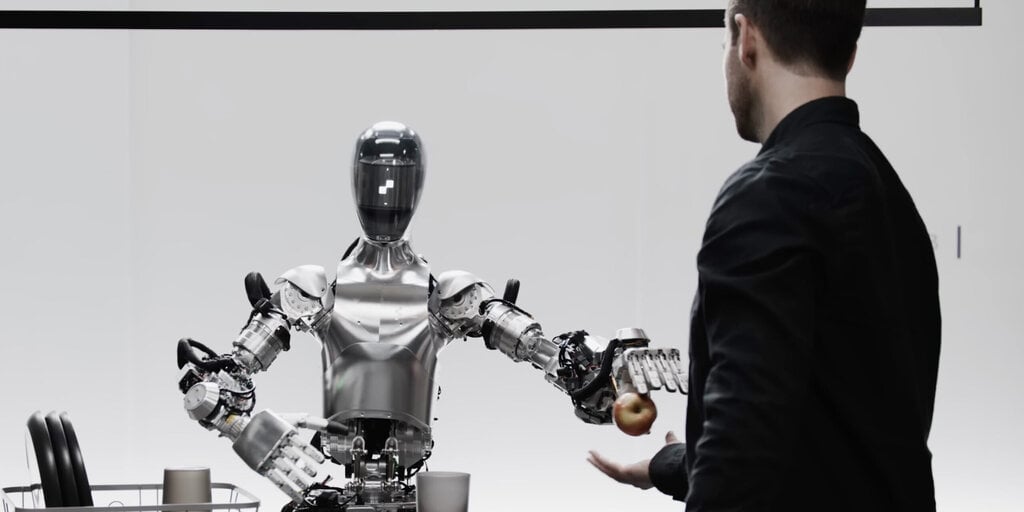

In the video, Figure 01 interacts with its creator, senior AI engineer Corey Lynch, who has the robot perform several tasks in his makeshift kitchen, including identifying apples, plates, and cups.

When Lynch asked the robot for something to eat, Figure 01 identified an apple as food. Lynch then had Figure 01 collect trash into a basket while he asked it questions at the same time, showing off the robot’s multitasking abilities.

On Twitter, Lynch explained the Figure 01 project in more detail.

“Our robot can describe visual experiences, plan future actions, reflect on memories, and verbally explain its reasoning,” he wrote in a wide-ranging thread.

Lynch said the team feeds images from the robot’s cameras and text transcribed from speech captured by its onboard microphones into a large multimodal model trained by OpenAI.

Multimodal AI refers to artificial intelligence that can understand and generate different types of data, such as text and images.

Lynch emphasized that Figure 01’s behavior is learned, performed at normal speed, and not remotely controlled.

“This model processes the entire history of the conversation, including past images, to derive a verbal response that is then passed back to the human through text-to-speech,” Lynch said. “The same model determines which learned closed-loop behavior to perform on the robot to execute a specific command and loads specific neural network weights onto the GPU to execute the policy.”
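The flow Lynch describes can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not Figure’s or OpenAI’s code: the `Turn` and `Decision` types, the behavior names, the weight file paths, and the keyword-matching `stub_multimodal_model` are all hypothetical stand-ins for components the thread does not detail.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str        # "human" or "robot"
    text: str           # transcribed speech or the robot's reply
    image: bytes = b""  # camera frame captured with this turn (may be empty)


@dataclass
class Decision:
    reply: str     # text that would be handed to text-to-speech
    behavior: str  # name of the learned behavior to run


# Hypothetical catalog mapping behavior names to policy weight files.
BEHAVIOR_WEIGHTS = {
    "hand_over_apple": "policies/hand_over_apple.bin",
    "collect_trash": "policies/collect_trash.bin",
    "idle": "policies/idle.bin",
}


def stub_multimodal_model(history):
    """Stand-in for the multimodal model: keyword matching on the latest turn."""
    latest = history[-1].text.lower()
    if "eat" in latest or "hungry" in latest:
        return Decision("Sure, here is an apple.", "hand_over_apple")
    if "trash" in latest:
        return Decision("I'll pick up the trash.", "collect_trash")
    return Decision("I'm not sure what to do.", "idle")


def step(history, transcript, frame, model=stub_multimodal_model):
    """Run one conversational turn and return (reply, weights_to_load)."""
    history.append(Turn("human", transcript, frame))
    decision = model(history)  # the model sees the full history, images included
    history.append(Turn("robot", decision.reply))
    weights = BEHAVIOR_WEIGHTS[decision.behavior]  # weights for the chosen policy
    return decision.reply, weights
```

In this sketch a single model call yields both the spoken reply and the behavior choice, mirroring Lynch’s point that the same model handles both; a real system would replace the stub with a multimodal model call and actually load the selected weights onto the GPU.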

Lynch said Figure 01 is designed to describe its surroundings concisely and can apply “common sense” to make decisions, such as guessing that the dishes will go into the rack next. It can also parse ambiguous statements, such as someone saying they are hungry, into actions like offering an apple, and then explain those actions.

The debut sparked an enthusiastic response on Twitter, with many impressed by Figure 01’s capabilities and adding it to their list of milestones on the way to the singularity.

“Please tell me your team watched all the Terminator movies,” one person responded.

“We need to find John Connor ASAP,” added another.

For AI developers and researchers, Lynch provided many technical details.

“All behaviors are driven by neural network visuomotor transformer policies, mapping pixels directly to actions,” Lynch said. “These networks take in onboard images at 10 Hz and generate 24-DOF actions (wrist poses and finger joint angles) at 200 Hz.”
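The two rates Lynch quotes imply some simple timing arithmetic, worked through below. This is only a back-of-the-envelope sketch: the constant and function names are made up for illustration, and how the policy actually turns each image into multiple actions is not described in the thread.

```python
IMAGE_RATE_HZ = 10    # onboard images consumed per second (from Lynch's thread)
ACTION_RATE_HZ = 200  # action commands produced per second (from Lynch's thread)
DOF = 24              # values per action: wrist poses and finger joint angles


def timing_summary(image_hz=IMAGE_RATE_HZ, action_hz=ACTION_RATE_HZ, dof=DOF):
    """Derive per-frame and per-second figures from the two quoted rates."""
    return {
        "actions_per_image": action_hz // image_hz,  # commands between two frames
        "image_period_ms": 1000 / image_hz,          # time between camera frames
        "action_period_ms": 1000 / action_hz,        # time between commands
        "values_per_second": action_hz * dof,        # joint/pose values per second
    }


summary = timing_summary()
print(summary)
```

Under these numbers, each camera frame is followed by 20 action commands, one every 5 milliseconds, before the next frame arrives 100 milliseconds later.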

Figure 01’s striking debut comes as policymakers and global leaders grapple with the arrival of AI tools in the mainstream. While most of the discussion has centered on large language models such as OpenAI’s ChatGPT, Google’s Gemini, and Anthropic’s Claude AI, developers are also exploring ways to give AI physical bodies, such as humanoid robots.

Figure AI and OpenAI did not immediately respond to Decrypt’s request for comment.

“One is a kind of utilitarian objective, and that’s what Elon Musk and others are aiming for,” Ken Goldberg, a professor at the University of California, Berkeley, previously told Decrypt. “A lot of the work that’s going on right now, the reason people are investing in companies like Figure, is because they expect these things to work well and be compatible,” he said, particularly in the field of space exploration.

Another company working on the fusion of AI and robotics is Hanson Robotics, which debuted its Desdemona AI robot in 2016.

“Even just a few years ago, I believed we would have to wait decades before a humanoid robot could fully converse while planning and executing fully learned actions on its own,” Corey Lynch, a senior AI engineer at Figure AI, said on Twitter. “Obviously, a lot of things have changed.”

Edited by Ryan Ozawa.