Let’s start with an inconvenient truth. We have lost control over artificial intelligence.

This isn’t all that surprising, considering we likely never had control over it in the first place. The upheaval at OpenAI over the sudden firing of CEO Sam Altman has raised questions about accountability within one of the world’s most powerful AI companies. But even before that boardroom drama, our understanding of how AI is created and used was limited.

Lawmakers around the world are struggling to keep up with the pace of AI innovation and have been unable to provide even a basic framework for regulation and oversight. The conflict between Israel and Hamas in the Gaza Strip has raised the stakes even further. AI systems are currently being used to determine who lives and who dies in Gaza. The results are plain for all to see, and they are frightening.

In an extensive investigation for the Israeli publication +972 Magazine, journalist Yuval Abraham spoke with several current and former officials about the Israeli military’s advanced AI war program, dubbed “The Gospel.”

Officials said The Gospel creates AI-generated targeting recommendations through “rapid and automated extraction of information.” Recommendations are matched against identifications performed by human soldiers. The system relies on a matrix of data points with a checkered history of false positives, such as facial recognition technology.

As a result, “military” targets are being produced in Gaza at an alarming rate. In previous Israeli operations, a shortage of targets slowed the military down, because humans needed time to identify targets and assess the likelihood of civilian casualties. The Gospel has dramatically accelerated this process.

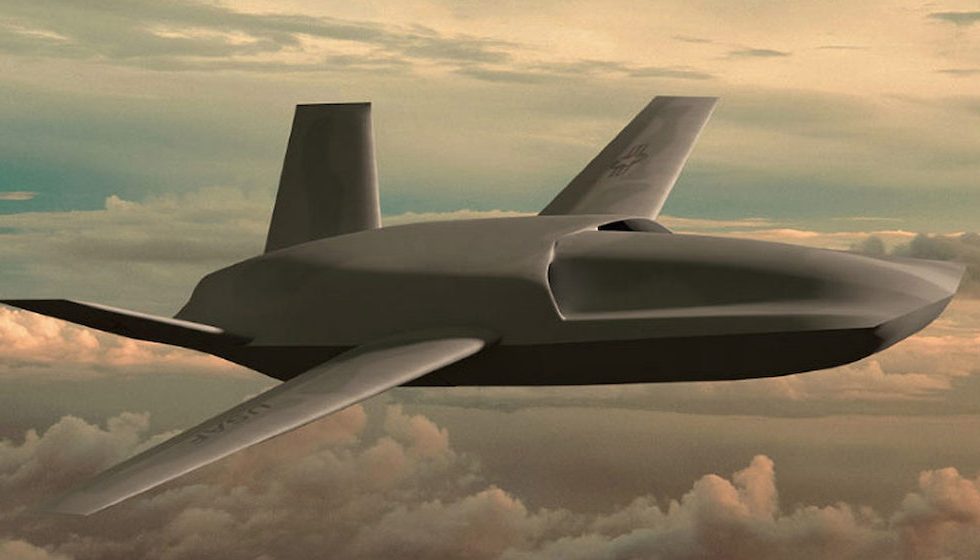

Because of The Gospel, Israeli warplanes can no longer keep up with the number of targets these automated systems provide. The sheer scale of the death toll in the past six weeks of fighting demonstrates the deadly nature of this new technology of warfare.

According to Gaza officials, 17,000 people have been killed, including at least 6,000 children. Citing several reports, American journalist Nicholas Kristof said that, since the war began in Gaza, a woman or child has been killed on average approximately every seven minutes, around the clock.

“Look at the physical landscape of Gaza,” Richard Moyes, a researcher with Article 36, a group that campaigns to reduce the damage caused by weapons, told the Guardian. “We have seen widespread flattening of urban areas by heavy explosive weapons, so claims that the precision and range of force used is narrow are not supported by the facts.”

Look to Gaza

Military forces around the world with similar AI capabilities are closely monitoring Israel’s attack on Gaza. Lessons learned in Gaza will be used to improve other AI platforms for use in future conflicts. The genie is out of the bottle. Future automated warfare will use computer programs to decide who lives and who dies.

While Israel continues to attack Gaza with AI-guided missiles, governments and regulators around the world are struggling to keep up with the pace of AI innovation in the private sector. Lawmakers and regulators cannot keep pace with the programs already released or with those still being created.

As The New York Times notes, “That gap is exacerbated by a lack of AI knowledge in governments and labyrinthine bureaucracies, and concerns that too many rules will inadvertently limit the benefits of the technology.”

As a result, AI companies are able to develop their products with little or no oversight. The situation is so extreme that we don’t even know what these companies are working on.

Consider the management debacle at OpenAI, which runs the popular AI platform ChatGPT. When CEO Sam Altman was unexpectedly fired, internet gossip began to focus on unconfirmed reports that OpenAI had developed a secret, powerful AI that could change the world in unexpected ways. Internal disagreements over its use supposedly led to the crisis in the company’s leadership.

We may never know if this rumor is true, but given the trajectory of AI and the fact that we don’t understand what OpenAI is doing, it seems plausible. The problem is that the public and lawmakers don’t have clear answers about the potential of super-powerful AI platforms.

Israel’s Gospel and the OpenAI mess mark a tipping point in AI. It’s time to move beyond the empty elevator pitch that AI will usher in a brave new world.

AI may help humanity achieve new goals, but it will not be a force for good if it is developed in the shadows and used to kill people on the battlefield. Regulators and lawmakers are unable to keep up with technological advances and do not have the tools to exercise sound oversight.

As powerful governments around the world watch Israel test AI algorithms on Palestinians, we cannot harbor false hopes that this technology will only be used for good. Given the failure of regulators to establish guardrails for the technology, we can only hope that the narrow interests of consumer capitalism will act as a check on how far AI truly transforms society.

It’s a vain hope, but it is probably all we have at this point.

This article is provided by syndicate office, Copyrighted material.