This article originally appeared on Open Source, a weekly newsletter about emerging technologies. To receive articles like this in your inbox, subscribe here.

December is typically a quiet month for businesses, but Google is busier than ever. On December 6, the technology giant announced Gemini, its latest artificial intelligence model.

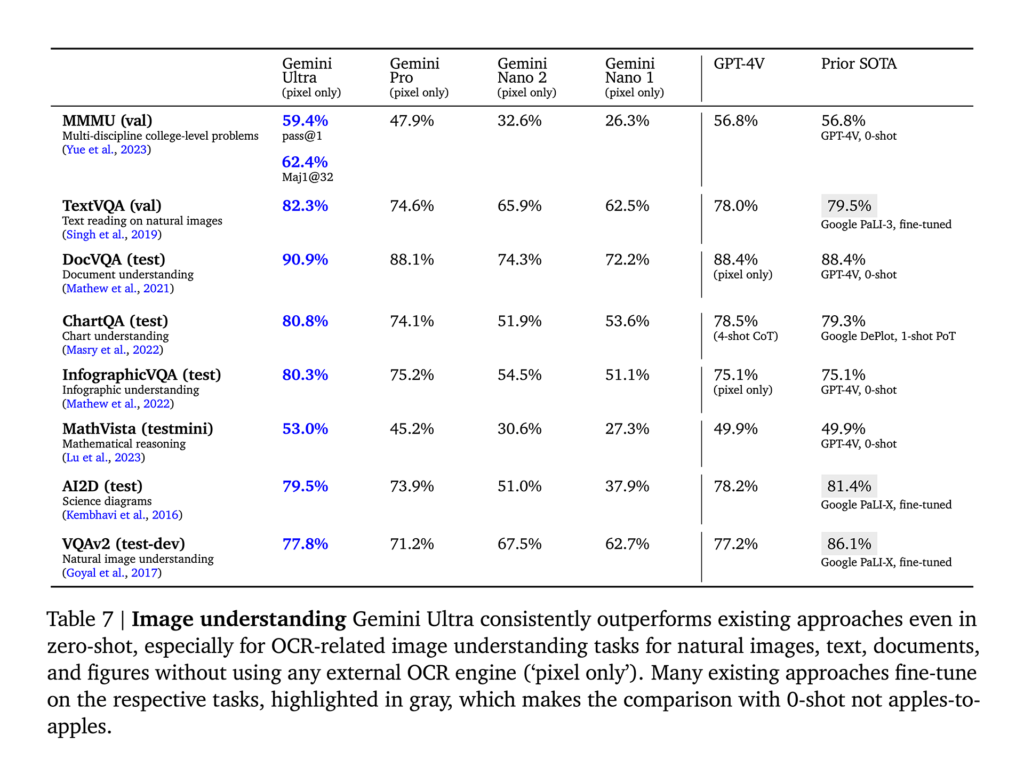

According to Google, Gemini is a fundamentally new kind of AI model. It can understand not only text but also images, video, and audio. The company also claimed that Gemini is its most powerful model to date, outperforming OpenAI’s GPT-4 on 30 out of 32 standard performance measures.

As a multimodal model, Gemini is described as being able to complete complex tasks across a variety of disciplines. Google launched Gemini with a demo that showed it writing code, explaining math problems, identifying similarities between images, understanding emojis, and more.

Three versions of Gemini will be released in stages.

- Gemini Ultra: The most powerful variant, tailored for complex tasks such as scientific research and drug discovery. Its release is scheduled for 2024 and promises groundbreaking features.

- Gemini Pro: A general-purpose model that can handle a variety of tasks, including chatbots, virtual assistants, content generation, and more. Developers and enterprise customers can now access it through Google’s Generative AI Studio or Google Cloud’s Vertex AI. It is also built into Bard to handle prompts that require advanced reasoning, planning, and understanding.

- Gemini Nano: The most efficient version, designed for on-device tasks and bringing AI capabilities natively to Android devices. It’s built into the Pixel 8 Pro to handle tasks like summarizing information.

Why is it becoming a hot topic?

Google’s claim that Gemini is superior to GPT-4 has understandably attracted widespread attention and scrutiny, as GPT-4 has traditionally been considered the industry gold standard. Since Google’s claims are unlikely to be unfounded, curiosity centers on the next big question: Gemini may be better, but by how much?

As it turns out, not by much.

According to results published by Google DeepMind, Gemini outperformed GPT-4 on the majority of measures (30 out of 32), although the differences were small. These results come from tests that evaluate the model’s performance across a variety of tasks involving text, images, and combinations of these modalities.

- For text-only questions, Gemini Ultra scored 90% and GPT-4 scored 87.3% on the Massive Multitask Language Understanding (MMLU) benchmark.

- For multimodal tasks, Gemini Ultra scored 59.4%, slightly higher than GPT-4’s 56.8%, on the Massive Multi-discipline Multimodal Understanding (MMMU) benchmark.
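To put those margins in concrete terms, here is a minimal Python sketch that computes Gemini Ultra’s lead on each benchmark. It uses only the four scores quoted above; the `scores` dictionary and the `margin` helper are illustrative names, not part of any Google or OpenAI tooling.

```python
# Scores (in percent) as quoted in the article; nothing here is re-measured.
scores = {
    "MMLU": {"Gemini Ultra": 90.0, "GPT-4": 87.3},
    "MMMU": {"Gemini Ultra": 59.4, "GPT-4": 56.8},
}


def margin(benchmark: str) -> float:
    """Return Gemini Ultra's lead over GPT-4 in percentage points."""
    result = scores[benchmark]
    # Round to one decimal place to hide floating-point noise.
    return round(result["Gemini Ultra"] - result["GPT-4"], 1)


for name in scores:
    print(f"{name}: Gemini Ultra leads by {margin(name)} percentage points")
```

On both benchmarks the lead works out to less than three percentage points.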

Despite the small margin, Gemini does not deserve scathing reviews. Considering Google’s late entry into the AI race, the tech giant has made great strides to catch up with, if not surpass, one of its most serious competitors in the AI field.

If anything, Google deserves more criticism for the way it produced Gemini’s demo video. The video reduced latency, shortened model outputs for brevity, and used voice overlays to (misleadingly) present Gemini as an all-around powerful AI that responds to user queries in real time. Gemini is good, but not that good.

The big picture

Unless you are an expert in AI or a related field, benchmark test results may look unremarkable and provide little insight beyond identifying which models are better at a particular task.

That’s perfectly fine, because a benchmark is just a benchmark. After all, the true test of Gemini’s capabilities (or those of any other AI model, for that matter) comes from everyday users like you, who use it to brainstorm ideas, find information, write code, and more.

From this perspective, Gemini is pretty cool, but not revolutionary, at least not yet.

Gemini has an advantage over GPT-4 because, in addition to the information it can collect from the internet, it has access to information from Google’s widely used search engine. OpenAI works primarily with information collected from the internet. Furthermore, if SemiAnalysis’s claims prove accurate, Gemini could leverage significantly more computing power than GPT-4 thanks to Google’s easy access to top-tier chips. Gemini’s strong results on the MMLU and MMMU benchmarks become less surprising when viewed against this background.

There are bigger, better questions to be answered about more important aspects of an AI model. For example, is Gemini as prone to hallucinations and fabrications as most current large language models (LLMs), or is it more resistant?

Google is eager to impress with Gemini, but even the company is quick to temper expectations. Demis Hassabis, co-founder and CEO of Google DeepMind, told Wired that LLMs need to be combined with other AI techniques to deliver AI systems that can understand the world in ways that today’s chatbots cannot.

Hassabis is probably right. What he was hinting at was artificial general intelligence, or AGI for short. This is something that major companies are clearly aware of and are actively working to develop. OpenAI has reportedly made a breakthrough in this respect, in a project that has since been called Q* (Q Star).

As more AI companies enter the fray and introduce new LLMs and related technologies, achieving AGI seems inevitable, but the timeline may be too long for the tastes of enthusiasts and innovators.

After OpenAI introduced ChatGPT to great acclaim, even an initially complacent Google entered the AI race. Its response, first with Bard and now with Gemini, may not be groundbreaking, but it illustrates how latecomers can close the gap through concerted effort. If anything, this should be a major revelation and the biggest takeaway for other AI companies looking to catch up with the big players.