After nearly three days of marathon “final” talks, European Union lawmakers tonight struck a political agreement on a risk-based framework for regulating artificial intelligence. The file was originally proposed in April 2021, but it took months of tricky three-way negotiations to get a deal over the line. This development means that pan-EU AI legislation is firmly on the way.

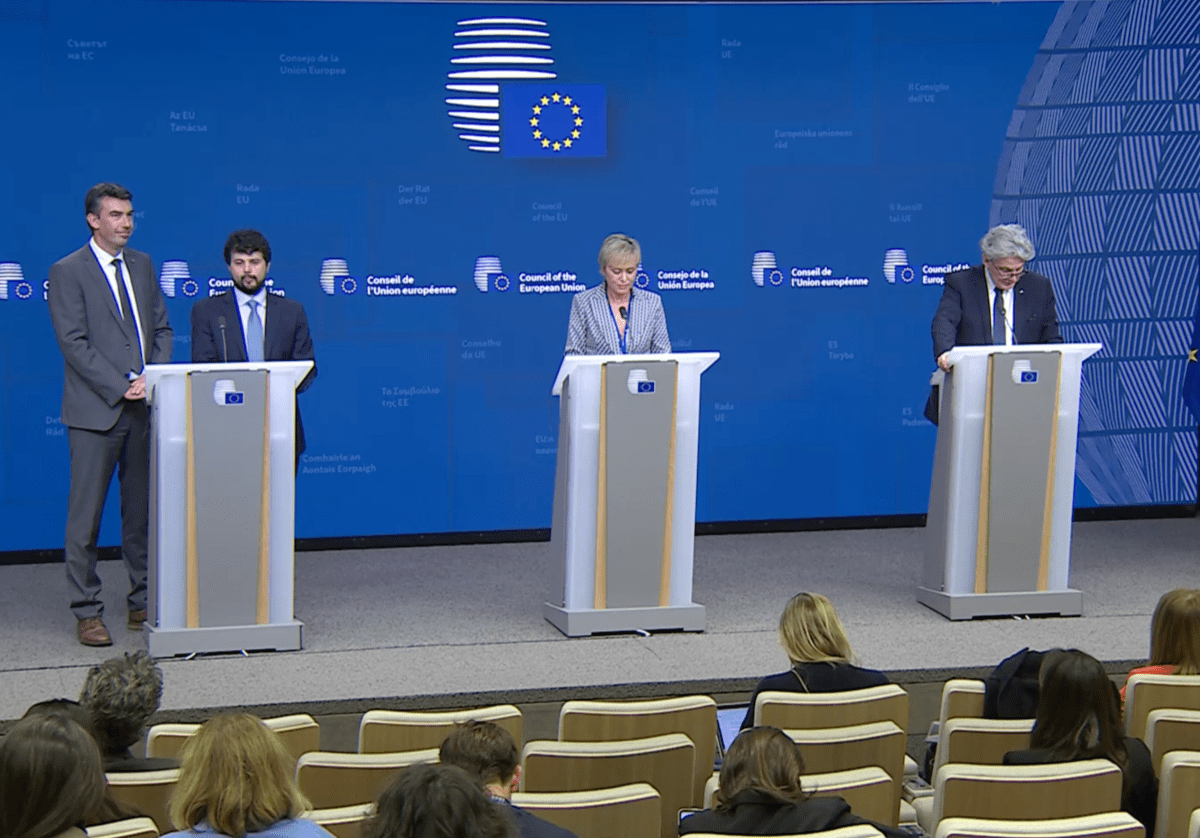

In the small hours between Friday night and Saturday morning local time, key representatives of the European Parliament, the Council and the European Commission (the bloc’s co-legislators) held a triumphant but exhausted press conference, each hailing the agreement as a landmark and historic outcome after a hard-fought battle.

Taking to X to tweet the news, European Commission President Ursula von der Leyen, who made AI legislation a key priority of her term when she took office at the end of 2019, also hailed the political agreement as a “world first”.

The full details of the agreement will not be confirmed until the final text is compiled and published, which could take several weeks. However, a press release published by the European Parliament confirms that the agreement reached with the Council includes a complete ban on the use of AI for the following purposes:

- Biometric classification systems that use sensitive characteristics (political, religious, philosophical beliefs, sexual orientation, race, etc.).

- Non-targeted scraping of facial images from the internet or surveillance camera footage to create a facial recognition database.

- Emotion recognition in the workplace and educational institutions.

- Social scoring based on social behavior and personal characteristics.

- AI systems that manipulate human behavior to circumvent human free will.

- AI used to exploit people’s vulnerabilities (due to age, disability, social or economic status).

Although the use of remote biometric identification in public places by law enforcement is not completely prohibited, the parliament said negotiators had agreed on a series of safeguards and narrow exceptions to limit the use of technologies such as facial recognition. These include a requirement for prior judicial authorisation and a restriction of use to a “strictly defined” list of crimes.

Retrospective (non-real-time) use of remote biometric ID AI is limited to “targeted searches for persons suspected of or convicted of serious crimes.” Real-time use of this intrusive AI technology is limited in time and location, and is permitted only for the following purposes:

- Targeted search for victims (kidnapping, human trafficking, sexual exploitation);

- Prevention of a specific and present terrorist threat; or

- The location of a person suspected of having committed one of the specific crimes listed in the regulation (terrorism, human trafficking, sexual exploitation, murder, kidnapping, rape, armed robbery, participation in a criminal organization, environmental crimes, etc.).

The agreed package also includes obligations for AI systems classified as “high risk” because of their potential to cause serious harm to “health, safety, fundamental rights, the environment, democracy and the rule of law”.

“MEPs succeeded in including, among other requirements, a mandatory fundamental rights impact assessment that also applies to the insurance and banking sectors. AI systems used to influence the outcome of elections and voter behaviour are also classified as high risk,” the parliament wrote. “Citizens will have the right to launch complaints about AI systems and receive explanations about decisions based on high-risk AI systems that affect their rights.”

Also agreed was a “two-tier” system of guardrails that would apply to “general” AI systems, such as the so-called foundational models that underpin the viral boom in generative AI applications like ChatGPT.

As we previously reported, the agreement reached on foundational models/general purpose AIs (GPAIs) includes transparency requirements for what co-legislators called “low-tier” AIs. This means that model makers must draw up technical documentation and produce (and publish) detailed summaries of the content used in training, in order to support compliance with EU copyright law.

“High-impact” GPAIs with so-called “systemic risk” (defined as models whose cumulative training compute exceeds 10^25 floating-point operations) are subject to stricter obligations.
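To give a sense of scale for the 10^25 figure, here is a minimal Python sketch. It assumes the widely used rule of thumb that training a dense model costs roughly 6 × parameters × training tokens in floating-point operations. That heuristic, the function names and the example model sizes are illustrative assumptions, not part of the agreement, and the legal test may be measured differently.

```python
# Threshold reported for "high-impact" GPAI: more than 10^25 floating-point
# operations of cumulative training compute.
SYSTEMIC_RISK_FLOPS = 1e25


def estimate_training_flops(n_params: float, n_tokens: float) -> float:
    """Rough estimate using the common 6 * N * D heuristic (an assumption)."""
    return 6.0 * n_params * n_tokens


def is_high_impact(training_flops: float) -> bool:
    """True if the training compute exceeds the reported threshold."""
    return training_flops > SYSTEMIC_RISK_FLOPS


# Hypothetical models, chosen only for illustration.
small = estimate_training_flops(7e9, 2e12)    # 7B parameters, 2T tokens
large = estimate_training_flops(1e12, 1e13)   # 1T parameters, 10T tokens

print(f"small: {small:.1e} FLOPs, high impact: {is_high_impact(small)}")
print(f"large: {large:.1e} FLOPs, high impact: {is_high_impact(large)}")
```

Under these assumptions, only the larger hypothetical model would cross the line into the stricter tier.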

“If these models meet certain criteria, they will have to conduct model evaluations, assess and mitigate systemic risks, conduct adversarial testing, report serious incidents to the Commission, ensure cybersecurity and report on their energy efficiency,” the parliament wrote. “MEPs also insisted that, until harmonised EU standards are published, GPAIs with systemic risk may rely on codes of practice to comply with the regulation.”

The Commission has been working with industry on a stopgap AI Pact for several months, and today it confirmed that this is intended to plug the gap in practice until the AI Act comes into force.

Commercialized foundational models/GPAIs will face regulation under the Act, but research and development is not intended to fall within its scope, and today’s announcements indicate that fully open source models will face lighter regulatory requirements than closed source ones.

The agreed package also promotes regulatory sandboxes and real-world testing, established by national authorities, to help start-ups and SMEs develop and train AI before it goes to market.

Penalties for violations can range from €35 million or 7% of global turnover to €7.5 million or 1.5% of turnover, depending on the offense and the size of the company.
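As a rough illustration of how the two reported tiers compare, the Python sketch below computes a fine ceiling for a given turnover. It assumes the higher of the fixed sum and the turnover percentage applies; the figures quoted above do not say which of the two governs, so treat that rule, the function name and the turnover values as hypothetical.

```python
def fine_cap(fixed_eur: float, turnover_share: float, global_turnover_eur: float) -> float:
    """Ceiling of a fine, ASSUMING the higher of the two figures applies."""
    return max(fixed_eur, turnover_share * global_turnover_eur)


# Top and bottom tiers as reported: (fixed amount in euros, share of turnover).
TOP_TIER = (35_000_000, 0.07)
BOTTOM_TIER = (7_500_000, 0.015)

# Hypothetical companies, for illustration only.
big_firm_top = fine_cap(*TOP_TIER, global_turnover_eur=2_000_000_000)
small_firm_top = fine_cap(*TOP_TIER, global_turnover_eur=100_000_000)
big_firm_bottom = fine_cap(*BOTTOM_TIER, global_turnover_eur=2_000_000_000)

print(f"Top tier, EUR 2bn turnover:    {big_firm_top:,.0f}")
print(f"Top tier, EUR 100m turnover:   {small_firm_top:,.0f}")
print(f"Bottom tier, EUR 2bn turnover: {big_firm_bottom:,.0f}")
```

On this reading, the percentage dominates for large companies and the fixed sum dominates for smaller ones.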

The deal agreed today also allows for a phased entry into force after the law is adopted: six months for the rules on prohibited uses, 12 months for transparency and governance requirements, and 24 months for all other requirements. The full effect of the EU’s AI law may therefore not be felt until 2026.
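The phase-in arithmetic can be sketched in a few lines of Python. The adoption date used here is purely hypothetical, since no date had been set at the time of the agreement, and the helper names are illustrative.

```python
from datetime import date

# Phase-in periods in months after adoption, as reported above.
PHASES = {
    "prohibited uses": 6,
    "transparency and governance": 12,
    "all other requirements": 24,
}


def add_months(start: date, months: int) -> date:
    """Shift a date by whole months, clamping the day to 28 so it is always valid."""
    total = start.year * 12 + (start.month - 1) + months
    year, month_index = divmod(total, 12)
    return date(year, month_index + 1, min(start.day, 28))


def phase_in_dates(adopted: date) -> dict[str, date]:
    """Map each group of rules to the date on which it would start to apply."""
    return {name: add_months(adopted, months) for name, months in PHASES.items()}


# Hypothetical adoption date, for illustration only.
schedule = phase_in_dates(date(2024, 3, 1))
for name, starts in schedule.items():
    print(f"{name}: {starts.isoformat()}")
```

With an adoption date in early 2024, the last group of requirements would land in 2026, consistent with the timeline described above.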

Spain has held the rotating Council presidency since the summer, and Carme Artigas, its secretary of state for digital and AI affairs, who led the Council’s negotiations on the file, called the agreement on the hotly contested file “the biggest milestone in the history of digital information in Europe”. It is a milestone not just for the bloc’s single digital market, she suggested, but also “for the world”.

“We have achieved the world’s first international regulation of artificial intelligence,” she announced at a late-night press conference to confirm the political agreement, adding: “We are very proud.”

She predicted that the law would support European developers, start-ups and future scale-ups by giving them “legal certainty as well as technical certainty”.

For the European Parliament, co-rapporteurs Dragoș Tudorache and Brando Benifei said their objective had been to deliver legislation that would ensure the AI ecosystem develops with a “human-centred approach” that respects fundamental rights and European values. Their assessment of the outcome was similarly upbeat, citing the agreement’s complete ban on the use of AI for predictive policing and for biometric classification as major achievements.

“We are finally on the right track, protecting the fundamental rights that our democracies need in order to withstand such extraordinary change,” Benifei said. “We are the first in the world to have horizontal legislation that takes this direction on fundamental rights, supports the development of AI on our continent, and is up to date with the frontier of artificial intelligence, with the most powerful models under clear obligations. So I think we delivered.”

“We have always been asked whether there is enough protection in this text, whether there is enough in it to stimulate innovation, and I can say that this balance is struck,” Tudorache added. “We have the safeguards in place, we have all the provisions we need, we have the redress we need to give the public confidence in their interactions with AI and in the products and services they will interact with.

“We need to use this blueprint now to aim for global convergence, because this is a global challenge for everyone. And I think that with the work we have done, difficult as it was, and by any standard and all previous precedent this was a marathon negotiation, we delivered.”

Thierry Breton, the EU’s internal market commissioner, also chipped in with his two euro cents, describing the deal, which was reached shortly before midnight Brussels time, as “historic”. “It’s the full package. It’s the complete deal. This is why we spent so much time on it,” he intoned. “This is about balancing user safety and startup innovation, while also respecting our fundamental rights and European values.”

Although the EU was very visibly patting itself on the back tonight for securing agreement on ‘world-first’ AI rules, the bloc’s lawmaking process is not yet over, as some formal steps remain. Not least, the final text must be adopted through votes in the Parliament and the Council. However, given how much division and disagreement there has been over how (or whether) AI should be regulated, the biggest hurdles have been removed by this political agreement, and the path to passing an EU AI law in the coming months appears clear.

The European Commission is certainly showing confidence. According to Breton, work to implement the agreement will begin immediately with the setting up of an AI Office within the EU’s executive. The AI Office will be responsible for coordinating with the supervisory authorities in member states that will need to enforce the rules on AI companies. “We welcome new colleagues… many of them,” he said. “We will start preparing from tomorrow.”