Meta-learning, a rapidly growing field of AI research, has made significant progress in training neural networks to adapt quickly to new tasks with minimal data. The technique exposes neural networks to a diverse range of tasks, cultivating the versatile representations that general problem solving requires. This varied exposure aims to give AI systems universal capabilities, an important step toward the grand vision of artificial general intelligence (AGI).

The main challenge in meta-learning is designing task distributions broad enough to expose the model to a wide variety of structures and patterns. Such broad exposure is fundamental to fostering universal representations in AI models, which are essential for tackling diverse problems, and it lies at the core of efforts to build more adaptive and general AI systems.

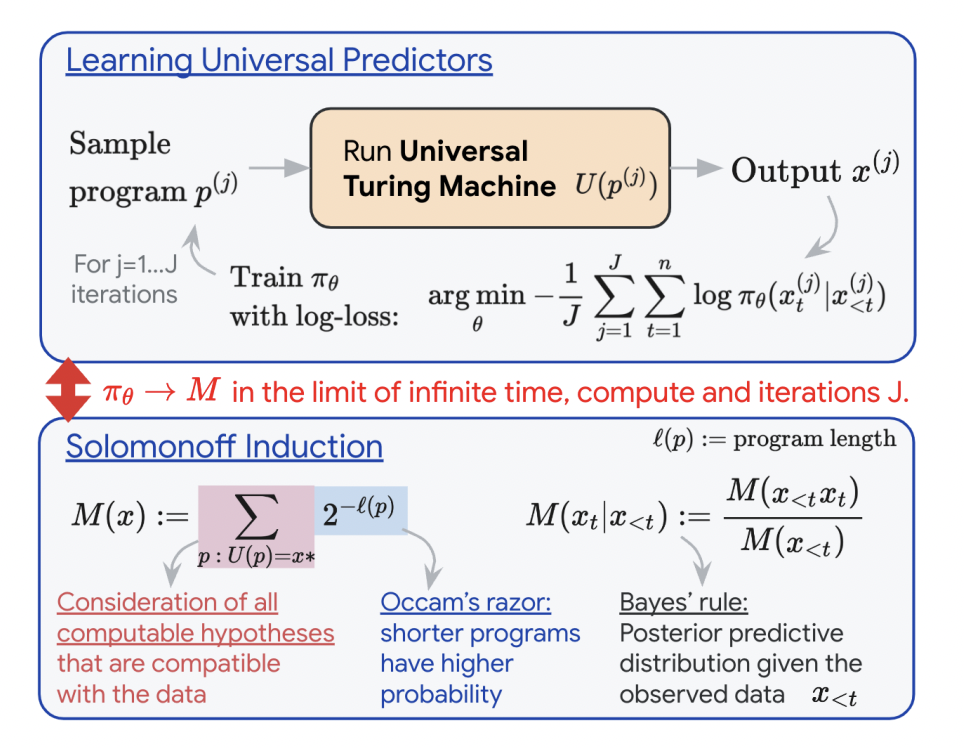

For universal prediction, current strategies often build on fundamental principles such as Occam's razor, which favors simpler hypotheses, and Bayesian updating, which refines beliefs as new data arrive. However, applying these principles in practice is limited mainly by the computational resources required. Solomonoff induction is a theoretical framework that combines them into an idealized universal prediction system, but its practical application is hampered by its computational demands (in the strict sense it is incomputable), which is why approximations of it have been developed.
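To make the combination of Occam's razor and Bayesian updating concrete, here is a toy Python sketch; it is an illustration, not the researchers' method. It assumes a tiny hypothesis class in which each hypothesis is a binary pattern `w` repeated forever, with a prior weight of 2^(-2|w|) standing in for a description length. The names `hypotheses` and `predict_next` are hypothetical. Solomonoff induction applies the same idea to all programs of a universal machine, which is exactly what makes it incomputable.

```python
from itertools import product


def hypotheses(max_len):
    """All binary patterns up to max_len; pattern w predicts w repeated forever."""
    for n in range(1, max_len + 1):
        for w in product((0, 1), repeat=n):
            yield w


def predict_next(history, max_len=8):
    """Posterior probability that the next bit is 1 under a toy Occam prior."""
    t = len(history)
    mass_one = total = 0.0
    for w in hypotheses(max_len):
        # Assumption: a pattern of length |w| costs 2|w| bits, so shorter
        # (simpler) patterns receive exponentially more prior weight.
        prior = 2.0 ** (-2 * len(w))
        # Bayesian update for deterministic hypotheses: the likelihood is 1 if
        # the pattern reproduces the history exactly, and 0 otherwise.
        if all(history[i] == w[i % len(w)] for i in range(t)):
            total += prior
            if w[t % len(w)] == 1:
                mass_one += prior
    if total == 0.0:
        return 0.5  # no hypothesis in this small class fits the data
    return mass_one / total
```

For example, after observing `0 1 0 1 0 1` almost all posterior mass sits on the short pattern `01`, so the predicted probability of a `1` next is tiny; longer patterns that also fit the data survive but carry exponentially less weight.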

Recent work at Google DeepMind breaks new ground by combining Solomonoff induction with neural networks through meta-learning. The researchers used Universal Turing Machines (UTMs) to generate training data, exposing neural networks to a wide range of computable patterns. This exposure is key to steering the networks toward learning universal inductive strategies.
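The data-generation idea can be sketched as follows: sample random programs, run each one on a small machine, and keep its outputs as a training sequence. This Python sketch assumes a Brainfuck-style instruction set (Turing-complete with an unbounded tape; the finite tape here is a simplification), cell values taken modulo a small alphabet, unmatched brackets treated as no-ops, and hard step and output limits, which are needed because whether a program halts cannot be decided in general. The paper's actual machine and sampling scheme may differ, and all names here are hypothetical.

```python
import random

OPS = "+-<>[]."


def match_brackets(prog):
    """Map each matched bracket index to its partner; unmatched brackets are ignored."""
    stack, pairs = [], {}
    for i, c in enumerate(prog):
        if c == "[":
            stack.append(i)
        elif c == "]" and stack:
            j = stack.pop()
            pairs[i], pairs[j] = j, i
    return pairs


def run(prog, alphabet=2, max_steps=1000, max_out=32, tape_len=64):
    """Run a program with step/output limits and return the emitted symbols."""
    pairs = match_brackets(prog)
    tape = [0] * tape_len
    ptr = pc = steps = 0
    out = []
    while pc < len(prog) and steps < max_steps and len(out) < max_out:
        c = prog[pc]
        if c == "+":
            tape[ptr] = (tape[ptr] + 1) % alphabet
        elif c == "-":
            tape[ptr] = (tape[ptr] - 1) % alphabet
        elif c == ">":
            ptr = (ptr + 1) % tape_len
        elif c == "<":
            ptr = (ptr - 1) % tape_len
        elif c == ".":
            out.append(tape[ptr])
        elif c == "[" and pc in pairs and tape[ptr] == 0:
            pc = pairs[pc]  # skip the loop: land on ']' and step past it below
        elif c == "]" and pc in pairs and tape[ptr] != 0:
            pc = pairs[pc]  # repeat the loop: land on '[' and step past it below
        pc += 1
        steps += 1
    return out


def sample_sequences(n, prog_len=20, seed=0, **kw):
    """Generate n training sequences from uniformly random programs."""
    rng = random.Random(seed)
    return [run("".join(rng.choice(OPS) for _ in range(prog_len)), **kw)
            for _ in range(n)]
```

Each call to `sample_sequences` yields a batch of symbol sequences; a sequence model is then trained to predict each symbol from the ones before it.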

DeepMind's methodology uses established neural architectures such as Transformers and LSTMs together with novel algorithmic data generators. The focus is not only on architectural choices but also on developing appropriate training protocols. The approach combines a thorough theoretical analysis with extensive experiments that evaluate the effectiveness of the training process and the capabilities of the resulting networks.
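A standard training signal for sequence predictors of this kind is the cumulative log-loss of next-symbol predictions, averaged over sequences drawn from a task distribution. The Python sketch below illustrates that objective under a hypothetical task distribution of biased coins whose bias is drawn uniformly at random; it is not the paper's training code, and the names `log_loss`, `meta_loss`, and `sample_tasks` are illustrative. It compares a fixed 50/50 guess with Laplace's rule of succession, which is the exact Bayesian predictor for this particular task distribution.

```python
import math
import random


def laplace(history):
    """Bayesian P(next = 1) under a uniform prior on the coin's bias."""
    return (sum(history) + 1) / (len(history) + 2)


def uniform(history):
    """Baseline that ignores the data entirely."""
    return 0.5


def log_loss(predict, seq):
    """Cumulative log-loss in bits: sum over t of -log2 p(x_t | x_<t)."""
    loss = 0.0
    for t, x in enumerate(seq):
        p1 = predict(seq[:t])
        loss -= math.log2(p1 if x == 1 else 1.0 - p1)
    return loss


def sample_tasks(n, length, seed=0):
    """Hypothetical task distribution: coins with a bias drawn uniformly from [0, 1]."""
    rng = random.Random(seed)
    tasks = []
    for _ in range(n):
        theta = rng.random()
        tasks.append([1 if rng.random() < theta else 0 for _ in range(length)])
    return tasks


def meta_loss(predict, tasks):
    """Average cumulative log-loss across sampled tasks (the meta-objective)."""
    return sum(log_loss(predict, seq) for seq in tasks) / len(tasks)
```

The Bayesian predictor attains a lower average loss than the fixed guess, illustrating why minimizing log-loss over a broad task distribution rewards predictors that behave like a Bayesian mixture over the tasks; a neural network trained on the same objective would have to learn comparable behavior from data.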

DeepMind's experiments showed that increasing model size improves performance, suggesting that scaling up models helps them learn more universal prediction strategies. In particular, large Transformers trained on UTM data transferred their knowledge effectively to a variety of other tasks, indicating that these models had internalized universal patterns and could reuse them.

Both large LSTMs and large Transformers achieved optimal performance on variable-order Markov sources. This is an important finding because it shows that these models can effectively represent Bayesian mixtures over programs, which are essential to Solomonoff induction. The result is noteworthy because it shows that the models do not merely fit the data but also capture and reproduce the underlying generative process.
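For binary variable-order Markov sources, a standard reference predictor is Context Tree Weighting (CTW), which is Bayes-optimal under a particular prior over such sources: it mixes over all context trees up to a maximum depth, using a Krichevsky-Trofimov (KT) estimator at each node. The Python sketch below is a deliberately simple, unoptimized version that recomputes the whole mixture for each query and assumes the sequence is preceded by zeros to supply an initial context; the function names are hypothetical. It illustrates what a Bayesian mixture over models looks like in practice rather than reproducing the researchers' evaluation setup.

```python
import math
from collections import defaultdict


def log_kt(zeros, ones):
    """Natural log of the Krichevsky-Trofimov block probability."""
    return (math.lgamma(zeros + 0.5) + math.lgamma(ones + 0.5)
            - math.lgamma(zeros + ones + 1) - math.log(math.pi))


def ctw_log_prob(bits, depth):
    """Log-probability of `bits` under CTW with maximum context depth `depth`."""
    counts = defaultdict(lambda: [0, 0])  # context tuple -> [zeros, ones]
    padded = [0] * depth + list(bits)      # assumption: zero-padded initial context
    for t in range(depth, len(padded)):
        # Context ordered from the most recent symbol to the oldest.
        ctx = tuple(padded[t - 1 - k] for k in range(depth))
        for d in range(depth + 1):
            counts[ctx[:d]][padded[t]] += 1

    def log_weighted(ctx):
        zeros, ones = counts.get(ctx, (0, 0))
        if zeros + ones == 0:
            return 0.0  # unvisited subtree: probability 1
        log_est = log_kt(zeros, ones)
        if len(ctx) == depth:
            return log_est  # leaf: KT estimate only
        # Children extend the context one symbol further into the past.
        log_split = log_weighted(ctx + (0,)) + log_weighted(ctx + (1,))
        # log(0.5 * exp(log_est) + 0.5 * exp(log_split)), computed stably.
        m = max(log_est, log_split)
        return m + math.log(0.5 * math.exp(log_est - m) + 0.5 * math.exp(log_split - m))

    return log_weighted(())


def ctw_prob_one(history, depth=3):
    """Predictive probability that the next bit is 1."""
    return math.exp(ctw_log_prob(list(history) + [1], depth)
                    - ctw_log_prob(history, depth))
```

On an alternating input such as `0 1 0 1 ...`, posterior weight shifts to trees that split on the most recent symbol, so the predicted probability of another `1` right after a `1` becomes small.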

In conclusion, Google DeepMind's research represents a major advance in AI and machine learning. It reveals the promising potential of meta-learning for equipping neural networks with universal prediction strategies. By using UTMs for data generation and balancing the theoretical and practical aspects of training protocols, the study marks an important step toward more versatile and general AI systems. The findings open new avenues for future research on AI systems with enhanced learning and problem-solving capabilities.

Sana Hassan, a consulting intern at Marktechpost and a dual degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a new perspective to the intersection of AI and real-world solutions.