Aurich Lawson | Getty Images

AI assistants have been widely available for a little more than a year, and they already have access to our most private thoughts and business secrets. People ask them about pregnancy, abortion, and prevention; they consult them when considering a divorce; they seek information about drug addiction; they ask for edits to emails containing proprietary trade secrets. Providers of these AI-powered chat services are keenly aware of how sensitive these discussions are and take active steps, mainly in the form of encryption, to prevent potential snoops from reading other people's interactions.

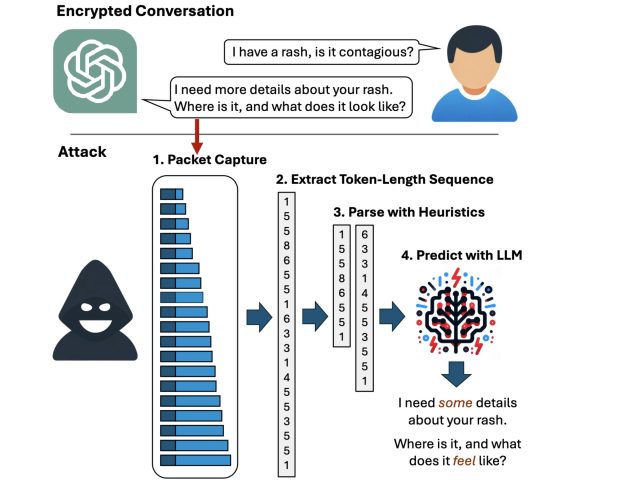

But now researchers have devised an attack that deciphers AI assistant responses with surprising accuracy. The technique exploits a side channel present in all of the major AI assistants except Google Gemini. It then refines the fairly raw results through a large language model trained specifically for the task. The result: someone in a passive adversary-in-the-middle position (meaning an adversary who can observe the data packets passing between the AI assistant and the user) can infer the specific topic of 55 percent of all captured responses, usually with high word accuracy. The attack can deduce responses with perfect word accuracy 29 percent of the time.

Token privacy

“Now, anyone can read private chats sent from ChatGPT and other services,” Yisroel Mirsky, head of the Offensive AI Research Lab at Ben-Gurion University in Israel, wrote in an email. “This includes malicious actors on the same Wi-Fi or LAN as the client (e.g., in the same coffee shop), or even malicious actors on the Internet: anyone who can observe the traffic. This attack is passive and can occur without OpenAI or its clients knowing. OpenAI encrypts its traffic to prevent this type of eavesdropping attack, but our research shows that there is a flaw in the way OpenAI uses encryption, which exposes the contents of the messages.”

Mirsky was referring to OpenAI, but with the exception of Google Gemini, all other major chatbots are also affected. As an example, the attack can infer the encrypted ChatGPT response:

- Yes, there are some important legal considerations that couples should keep in mind when considering divorce…

as:

- Yes, there are some potential legal considerations that someone should be aware of when considering divorce. …

and the Microsoft Copilot encrypted response:

- Here are some of the latest research findings on effective teaching methods for students with learning disabilities: …

is inferred as:

- Here are some of the latest research findings on cognitive behavioral therapy for children with learning disabilities: …

The words that differ between each actual response and its inferred version show that the precise wording isn't perfect, but the meaning of the inferred sentence is highly accurate.

Weiss et al.
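A much simpler stand-in than the researchers' purpose-trained language model helps show why a length-only side channel leaves room for substitutions like "potential" for "important" in the ChatGPT example above. Assuming, purely for illustration, that every word is one token, both of those words are nine characters long, so they produce identical lengths. The hypothetical helpers `token_lengths` and `consistent_candidates` below keep every candidate sentence that matches an observed length sequence; choosing the most plausible survivor is, roughly, the job the researchers hand to their LLM. Real LLM tokenizers split text into subword pieces, so real length sequences would differ from this toy version.

```python
def token_lengths(text: str) -> list[int]:
    # Toy tokenizer (assumption): one token per whitespace-separated word.
    # Real LLM tokenizers use subword tokens, so real sequences differ.
    return [len(word) for word in text.split()]


def consistent_candidates(observed: list[int], candidates: list[str]) -> list[str]:
    # Keep every candidate whose token-length sequence equals the observed one.
    # Several candidates can survive; length alone cannot tell them apart.
    return [c for c in candidates if token_lengths(c) == observed]


# Hypothetical observation: what a length-only side channel would reveal.
observed = token_lengths("some important legal considerations")
candidates = [
    "some important legal considerations",
    "some potential legal considerations",
    "several important legal considerations",
]
print(consistent_candidates(observed, candidates))
# ['some important legal considerations', 'some potential legal considerations']
```

Both of the first two candidates survive, which is why a second stage that ranks candidates by plausibility is needed at all.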

The following video shows an actual attack on Microsoft Copilot.

A token-length sequence side-channel attack against Bing.

A side channel is a means of obtaining secret information from a system through indirect or unintended sources, such as physical manifestations or behavioral characteristics: the power consumed, the time required, or the sound, light, or electromagnetic radiation produced during a given operation. By carefully monitoring these sources, attackers can assemble enough information to recover encrypted keystrokes or encryption keys from CPUs, browser cookies from HTTPS traffic, or secrets from smartcards. The side channel used in this latest attack resides in the tokens that AI assistants use when responding to user queries.
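A classic timing side channel makes the general idea concrete. The sketch below is a textbook illustration, not anything from this research: a hypothetical `leaky_equals` check stops at the first mismatched character, so the work it does (and therefore the time it takes) grows with the length of the correct prefix. To keep the example deterministic, it reports a count of matched characters as a stand-in for wall-clock time; a real attacker would have to measure noisy timings instead. The hypothetical `recover_secret` then rebuilds the secret one character at a time without ever seeing it directly.

```python
import string
from collections.abc import Callable


def leaky_equals(secret: str, guess: str) -> tuple[bool, int]:
    # Early-exit comparison. Returns (match, work), where work counts the
    # characters that matched before the loop stopped. It stands in for the
    # time the check takes, which is what leaks in a real timing attack.
    work = 0
    for s, g in zip(secret, guess):
        if s != g:
            return False, work
        work += 1
    return len(secret) == len(guess), work


def recover_secret(oracle: Callable[[str], tuple[bool, int]],
                   length: int, alphabet: str) -> str:
    # Assumes the secret's length is known and every character is in alphabet.
    known = ""
    for _ in range(length):
        # The correct next character extends the matching prefix, so it
        # produces strictly more work than any wrong character.
        known += max(alphabet, key=lambda c: oracle(known + c)[1])
    return known


print(recover_secret(lambda g: leaky_equals("hunter", g), 6, string.ascii_lowercase))
# hunter
```

The usual defense is a comparison whose running time does not depend on where the inputs differ, such as Python's `hmac.compare_digest`.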

Tokens are akin to words that are encoded so that LLMs can understand them. To enhance the user experience, most AI assistants send tokens on the fly, as soon as they are generated. This lets end users receive the response continuously, word by word, as it is generated, rather than all at once much later, after the assistant has generated the entire answer. Although the token delivery is encrypted, the real-time, token-by-token transmission exposes a previously unknown side channel, which the researchers call the “token-length sequence.”
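A minimal simulation shows why encryption alone does not hide token lengths. It rests on stated assumptions rather than on any specific service's wire format: each message carries exactly one new token, the cipher preserves plaintext length (no padding), and every message adds the same fixed overhead. The constant `OVERHEAD`, the helper names, and the example token split are all hypothetical. Under those assumptions the eavesdropper never decrypts anything; subtracting the overhead from each observed packet size yields the token-length sequence.

```python
import os

# Hypothetical constant bytes added to every message (framing, auth tag, etc.).
OVERHEAD = 40


def send_encrypted(token: str) -> bytes:
    # Models what an eavesdropper sees on the wire: random-looking bytes whose
    # length is the token's byte length plus the fixed overhead. This assumes
    # a length-preserving cipher with no padding.
    body = token.encode("utf-8")
    return os.urandom(len(body) + OVERHEAD)


def observed_token_lengths(packets: list[bytes]) -> list[int]:
    # The attacker only uses packet sizes, never packet contents.
    return [len(p) - OVERHEAD for p in packets]


# Hypothetical tokenization of the start of a streamed response.
tokens = ["Yes", ",", " there", " are", " several"]
packets = [send_encrypted(t) for t in tokens]
print(observed_token_lengths(packets))  # [3, 1, 6, 4, 8]
```

The subtraction step only works because every message's size tracks its token's size. Padding messages to a fixed or randomized length, or batching several tokens per message, would break that correspondence.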