The recommendations are part of a 50-page document Microsoft unveiled on Tuesday, laying out a broad vision for how governments should approach AI.

As lawmakers and regulators across the country discuss how to regulate AI, companies developing the new technology have issued a number of proposals for how politicians should handle the industry.

Microsoft has long lobbied governments on issues that affect its business, and it has sought to position itself as an active and helpful company that shapes the debate and the eventual legislation by aggressively pushing for regulation.

Smaller technology companies and venture capitalists are skeptical of the approach, accusing big AI companies like Microsoft, Google and OpenAI of trying to pass legislation that would make it harder for startups to compete with them. Supporters of legislation, including California politicians who are leading the nation in passing broad AI laws, point to the government’s early failure to regulate social media, which they say may have allowed problems like cyberbullying and misinformation to flourish unchecked.

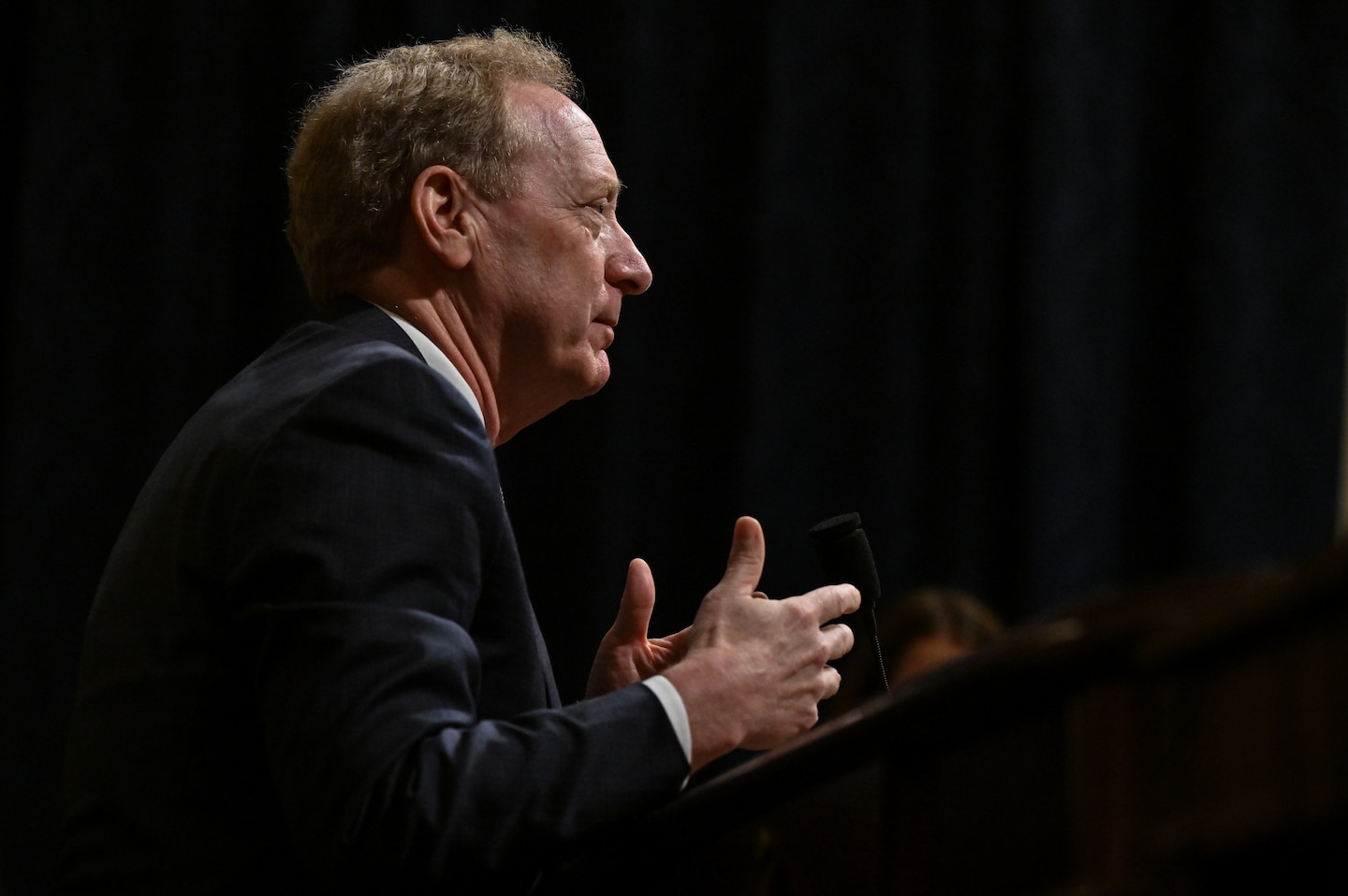

“Ultimately, the danger is not in moving too fast, but in moving too slow, or not moving at all,” Microsoft President Brad Smith wrote in the policy document.

In the document, Microsoft called for the enactment of a “deepfake fraud law” that would specifically make it illegal to use AI to deceive people.

As AI gets better at generating voices and images, scammers are already using it to impersonate people’s loved ones and trick them into sending money. Other tech lobbyists argue that existing anti-fraud laws are enough to crack down on AI-enabled scams, and that the government doesn’t need to enact additional legislation.

Last year, Microsoft called for an independent body to regulate AI, while others argued that the FTC and DOJ have the ability to do so.

Microsoft also called on Congress to require AI companies to build “provenance” tools into their AI products.

AI images and audio are already being used around the world for propaganda and to mislead voters, and AI companies are working on technology that embeds hidden signatures in AI images and videos so the content can be identified as AI-generated. However, deepfake detection is notoriously unreliable, and some experts question whether it will ever be possible to reliably separate AI content from real images and sounds.

States and Congress should also update laws addressing the creation and sharing of sexually exploitative images of children and of nonconsensual intimate images, Microsoft said. AI tools are already being used to create sexual images of people against their will and sexual images of children.

Government Scanner

Hill Happenings

Senators turn to online content creators to push bill (Taylor Lorenz)

Inside the Industry

Trump v. Harris is splitting Silicon Valley into opposing political camps (Trisha Thadani, Elizabeth Dwoskin, Nitasha Tiku, Gerrit De Vynck)

TikTok has a Nazi problem (Wired)

Amazon paid about $1 billion for Twitch in 2014. It’s still losing money. (The Wall Street Journal)

Competition Watch

Trending

How Elon Musk came to support Donald Trump (Josh Dawsey, Eva Dou, Faiz Siddiqui)

A Field Guide to Spotting Fake Photos (Chris Velazco and Monique Woo)

AI gives weather forecasters a new edge (New York Times)

Daybook

- The Information Technology and Innovation Foundation hosts an event, “Can China Innovate with EVs?”, Tuesday at 1 p.m. in 2045 Rayburn House Office Building.

- The Consumer Technology Association hosts a conversation with White House National Cyber Director Harry Coker Jr., Tuesday at 4 p.m. at the CTA Innovation House.

- The Senate Budget Committee holds a public hearing on the future of electric vehicles, Wednesday at 10 a.m. in 608 Dirksen Senate Office Building.

- The Center for Democracy and Technology hosts a virtual event, “What You Need to Know About Artificial Intelligence,” Wednesday at noon.

- Sens. Ben Ray Luján (D-N.M.) and Alex Padilla (D-Calif.) host a public panel, “Combating Digital Election Disinformation in Languages Other Than English,” Wednesday at 4 p.m. in Dirksen Senate Office Building, Room G50.

- The U.S. General Services Administration holds a Federal AI Hackathon, Thursday at 9 a.m.

Before you log off

That’s all for today. Thank you for joining us, and please encourage others to subscribe. Get in touch with Cristiano (via email or social media) and Will (via email or social media) for tips, feedback or greetings.