OpenAI opened the door to military applications for its technology with an unannounced update to its usage policy. The previous policy prohibited the use of its products for "military and warfare" purposes, but that language has now disappeared, and OpenAI did not deny that military use is now possible.

The Intercept first noticed the change, which appears to have gone live on January 10.

The technology industry sees unannounced changes to policy wording fairly frequently, as the products whose usage those policies govern evolve and change, and OpenAI is clearly no exception. In fact, the company's recent announcement that its user-customizable GPTs will be rolled out to the public, alongside a vaguely articulated monetization policy, may have made some changes necessary.

However, the change to the no-military policy can hardly be a consequence of this particular new product. Nor can OpenAI credibly claim that dropping the "military and warfare" exclusion simply makes the policy "clearer" or "easier to read," as its statement regarding this update does. This is a substantive and consequential policy change, not a restatement of the same policy.

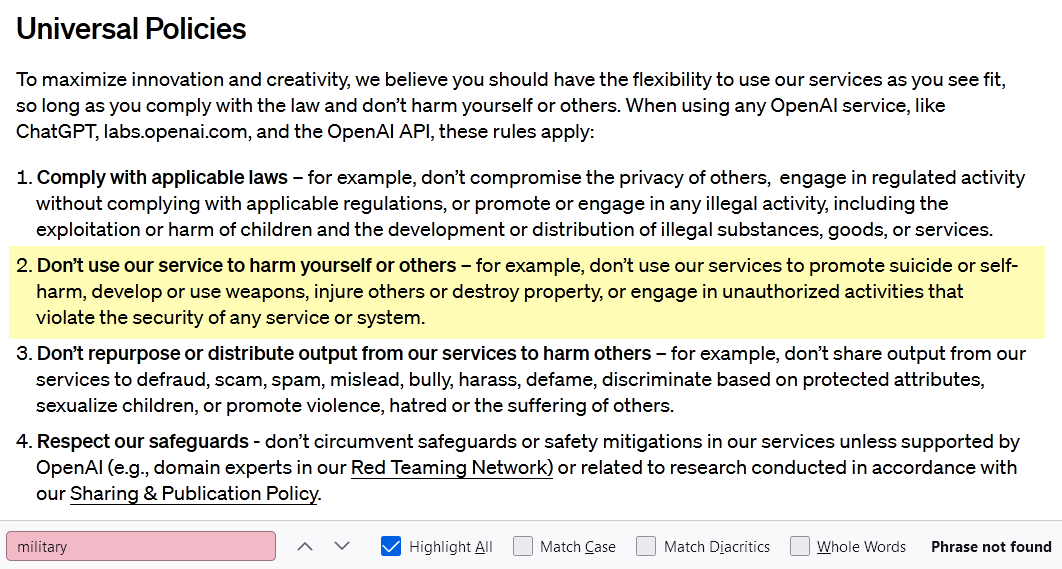

You can read the current usage policy here, and the old one here. Here are screenshots with the relevant parts highlighted:

Before the policy change. Image credits: OpenAI

After the policy change. Image credits: OpenAI

It's obviously been rewritten entirely, but whether it's more readable is a matter of taste. I happen to think that a bulleted list of clearly prohibited practices is easier to read than the more general guidelines that replaced it. OpenAI's policy writers clearly think otherwise, and if the change also gives them more latitude to interpret, favorably or unfavorably, a practice that was previously prohibited outright, that is simply a pleasant side effect.

There is still a blanket prohibition on developing and using weapons, as OpenAI representative Nico Felix explained, and that prohibition was originally listed separately from "military and warfare." After all, the military does more than make weapons, and weapons are made by others besides the military.

And it is precisely where these categories do not overlap that I suspect OpenAI is considering new business opportunities. Not all of the Department of Defense’s activities are strictly war-related. As any academic, engineer, or politician knows, military authorities are deeply involved in all kinds of basic research, investment, small business funding, and infrastructure support.

OpenAI's GPT platform could be very useful, for example, to Army engineers looking to summarize decades of documentation on a region's water infrastructure. For many companies, defining and navigating their relationship with government and military money is a genuine dilemma. Google's Project Maven famously went one step too far, yet few seemed nearly as bothered by the multibillion-dollar JEDI cloud contract. It may be fine for an academic researcher on an Air Force Research Laboratory grant to use GPT-4, but not for a researcher inside AFRL working on the same project. Where do you draw the line? Even a strict "no military" policy has to stop a few steps removed from the military itself.

That said, the complete removal of "military and warfare" from OpenAI's prohibited uses suggests that the company is at least open to serving military customers. I asked the company whether it would confirm or deny that this was the case, and warned it that, given the language of the new policy, anything other than a denial would be interpreted as confirmation.

As of this writing, they have not responded. I’ll update this post if I get a response.