OpenAI’s video generation tool Sora wowed the AI community in February with smooth, realistic videos that appeared far ahead of its competitors. But the carefully stage-managed debut left out many details. Those details have now been filled in by a filmmaker who was given early access to create a short using Sora.

Shy Kids is a Toronto-based digital production team that OpenAI selected as one of only a few to produce a short film. Although the work was primarily meant to promote OpenAI, the team was given considerable creative freedom in creating “air head.” In an interview with visual effects news outlet fxguide, post-production artist Patrick Cederberg described “actually using Sora” as part of his work.

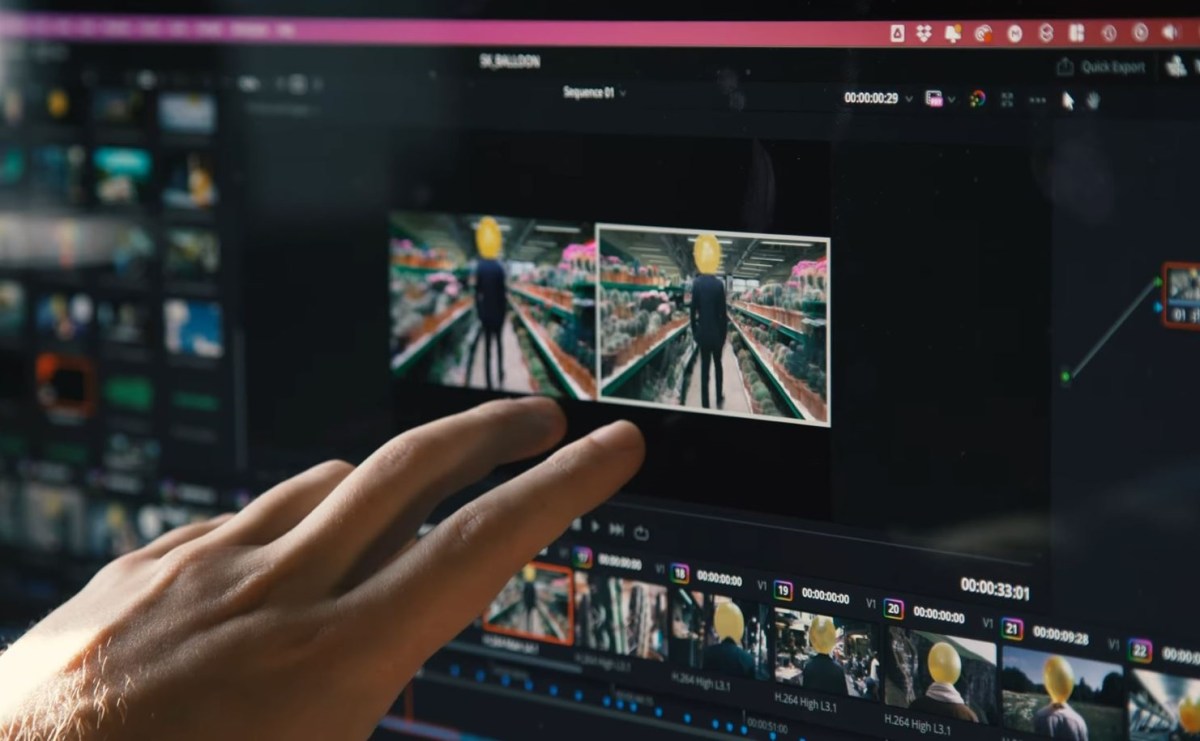

Perhaps the most important takeaway for most people is simply this: while OpenAI’s post highlighting the shorts lets readers assume they emerged more or less fully formed from Sora, in reality these were professional productions, complete with robust storyboarding, editing, color correction, and post work such as rotoscoping and VFX. Just as Apple says “shot on iPhone” but doesn’t show the studio setup, professional lighting, or color work after the fact, the Sora post talks only about what the tool lets people do, not how they actually did it.

Cederberg’s interview is interesting and quite non-technical, so if you’re at all curious, visit fxguide to read it. But some interesting details about using Sora indicate that, impressive as the model is, it’s probably not as big an advance as we thought.

“At the moment, control remains both the most desirable thing and the most elusive. … The closest we could get was to be very descriptive with the prompts. Describing the characters’ wardrobes and the type of balloon was our way around inconsistency, because we haven’t yet got the feature set to fully control consistency from shot to shot, generation to generation,” Cederberg said.

In other words, matters that are simple in traditional filmmaking, such as choosing the color of a character’s clothing, require elaborate workarounds and checks in a generative system, because each shot is created independently of the others. That could obviously change, but it’s certainly a lot more work at the moment.

Sora’s output also had to be monitored for unwanted elements. Cederberg described how the model would routinely generate a face on the balloon that the main character has for a head, or a string hanging down in front of him. If the prompt couldn’t be made to exclude them, they had to be removed in post, which was another time-consuming process.

Precise timing and movement of characters and the camera is practically impossible. “There’s a little bit of temporal control over where these different actions occur in the actual generation, but it’s not precise … it’s kind of a shot in the dark,” said Cederberg.

For example, timing a gesture like a wave is a highly approximate, suggestion-driven process, unlike manual animation. And a shot such as a pan upward over a character’s body may or may not reflect what the filmmaker wants. So in this case, the team rendered a shot composed in portrait orientation and did a crop pan in post. The generated clips were also often in slow motion for no particular reason.

An example of a shot as it came out of Sora and how it ended up in the short. Image credits: Shy Kids

In fact, Cederberg said that everyday filmmaking terms such as “pan right” and “tracking shot” produced inconsistent results in general, which the team found quite surprising.

“The researchers, before they approached artists to play with this tool, hadn’t really been thinking like filmmakers,” he said.

As a result, the team ran hundreds of generations, each lasting 10 to 20 seconds, but ended up using only a handful. Cederberg estimated the ratio at 300:1, though of course we would probably all be surprised by the ratio on an ordinary shoot.

The team actually made a little behind-the-scenes video describing some of the issues they encountered, if you’re interested. As with a lot of AI-related content, the comments are quite critical of the whole effort, though not as harsh as the reaction to the AI-assisted ad that was pilloried recently.

A final interesting point concerns copyright. If you ask Sora to give you a “Star Wars” clip, it will refuse. And if you try to get around that with “a robed guy with a laser sword in a retro-futuristic spaceship,” it will refuse that too, because some mechanism recognizes what you’re trying to do. It also refused to do “Aronofsky-esque shots” or “Hitchcockian zooms.”

On the one hand, that makes perfect sense. But it raises a question: if Sora knows what these are, does that mean the model was trained on that content, the better to recognize that it is infringing? OpenAI, which keeps its training data cards close to its vest, as in CTO Mira Murati’s interview with Joanna Stern, will almost certainly never tell us.

As for Sora and its use in filmmaking, it is clearly a powerful and useful tool in its place, but that place is not “creating films out of whole cloth.” Not yet, anyway. As another villain once famously said, “that comes later.”