
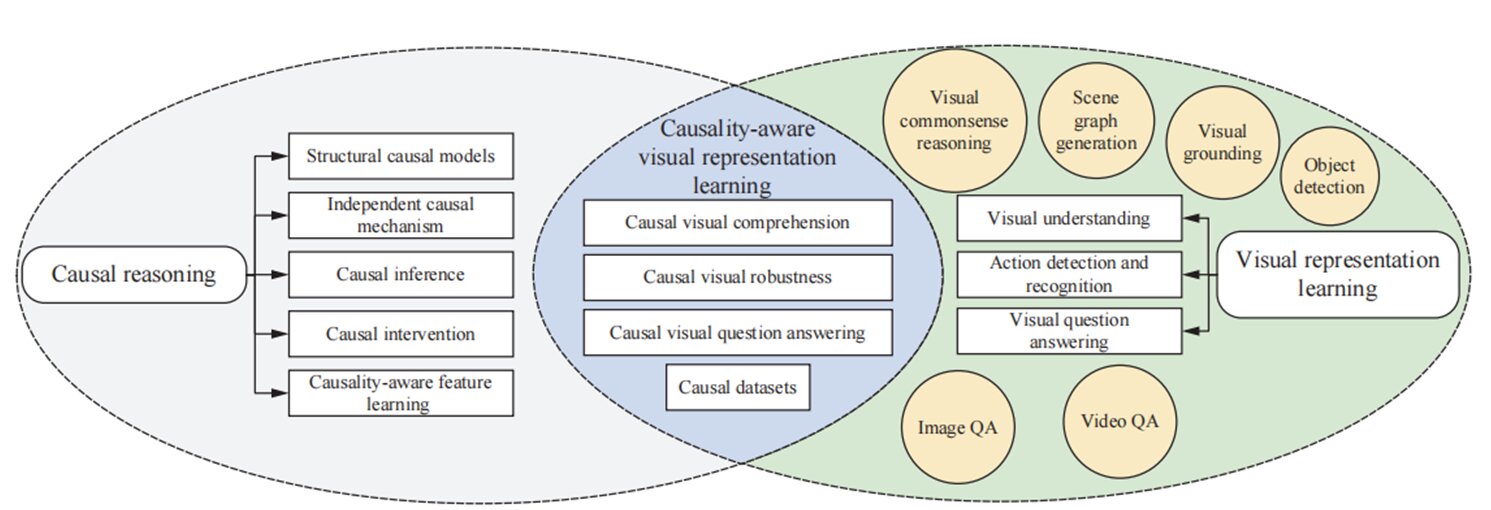

An overview of the structure of this paper, including related methods, datasets, challenges, and a discussion of the relationship between causal inference, visual representation learning, and their integration. Credit: Beijing Zhongke Journal Publishing Co., Ltd.

With the advent of large amounts of heterogeneous multimodal data, such as images, videos, text/language, audio, and multisensor data, deep learning-based techniques have shown promising performance on a variety of computer vision and machine learning tasks, including visual understanding, video understanding, visual-language analysis, and multimodal fusion.

However, existing methods rely heavily on fitting data distributions and tend to capture spurious correlations across modalities. As a result, they cannot learn the essential causal relationships underlying multimodal knowledge, which are needed for good generalization and cognitive abilities.

Inspired by the fact that most of the data in the computer vision community is independent and identically distributed (IID), much of the literature has adopted data augmentation, pre-training, self-supervision, and novel architectures to improve the robustness of state-of-the-art deep neural networks. However, it has been argued that such strategies only learn correlation-based patterns (statistical dependencies) from the data and may not generalize well when the IID setting is not guaranteed.

Causal inference offers a promising alternative to correlation-based learning: its strong ability to reveal the underlying structural knowledge of the data-generating process allows what is learned to generalize well across different tasks and environments under interventions.

Recently, causal inference has attracted growing attention in a wide range of high-impact areas of computer vision and machine learning, including interpretable deep learning, causal feature selection, visual understanding, visual robustness, visual question answering, and video understanding. A common challenge for these causal methods is how to build powerful cognitive models that can fully discover causal and spatiotemporal relationships.

In their paper, the researchers aim to provide a comprehensive overview of causal inference in visual representation learning, to draw attention, stimulate discussion, and bring to the fore the urgency of developing new causality-based visual representation learning methods.

There are several existing surveys on causal inference, but they target general representation learning tasks such as deconfounding, out-of-distribution (OOD) generalization, and debiasing.

The work is published in the journal Machine Intelligence Research.

Unlike those surveys, this paper offers a systematic and comprehensive review of causal inference, visual representation learning, and their integration, covering related research, datasets, insights, and future challenges. To keep the review concise and clear, the paper selects and cites relevant works based on their source, year of publication, influence, and coverage of the different aspects of the topic.

Overall, the main contributions of this work are:

First, the paper discusses the basic concepts of causality, the structural causal model (SCM), the independent causal mechanism (ICM) principle, causal inference, and causal intervention. Based on this analysis, it then suggests some directions for conducting causal inference in visual representation learning tasks. This paper may be the first to suggest potential research directions for causal visual representation learning.

Second, to make causal visual representation learning more efficient, the paper presents a prospective review that systematically and structurally assesses existing work along the directions above. The researchers focus on the relationship between visual representation learning and causal inference, aiming to deepen understanding of why and how existing causal inference methods help visual representation learning, and to provide inspiration for future research.

Third, the paper explores and discusses future research directions and open questions in using causal inference techniques to address visual representation learning, which can encourage and support broader and deeper research in related fields.

Section 2 presents preliminaries in five parts. The first part covers the basic concepts of causality. Causal learning differs from statistical learning in that it aims to discover causal relationships that go beyond statistical associations. Learning causal relationships requires machine learning techniques that not only predict the outcomes of IID experiments but also reason in causal terms.

The second part introduces the SCM, which provides a formal, structural description of causal relationships. The third part is the ICM principle, which describes the independence of causal mechanisms. The fourth part is causal inference, whose purpose is to estimate the change in an outcome (or effect) under different treatments. The last part is causal intervention, which aims to capture the causal effects of intervening on variables and to exploit causal relationships within the dataset to improve model performance and generalization ability.
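To make the difference between observing and intervening concrete, the sketch below simulates a small hypothetical SCM. The variables, probabilities, and function names (`sample`, `p_y`) are illustrative assumptions, not taken from the paper: a confounder Z drives both X and Y, so conditioning on X mixes in Z's influence, whereas the intervention do(X = x) replaces X's structural equation with a constant and cuts the Z → X edge.

```python
import random

# Hypothetical toy SCM (an illustrative assumption, not the paper's model):
#   Z := Bernoulli(0.5)                     confounder, e.g. background context
#   X := Bernoulli(0.8 if Z else 0.2)       "treatment", e.g. a visual cue
#   Y := Bernoulli(0.3 + 0.2*X + 0.4*Z)     outcome
def sample(n, do_x=None, seed=0):
    """Draw n (z, x, y) samples; if do_x is given, X's mechanism is replaced by that constant."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z = rng.random() < 0.5
        if do_x is None:
            x = rng.random() < (0.8 if z else 0.2)
        else:
            x = bool(do_x)  # intervention: X no longer listens to Z
        y = rng.random() < 0.3 + 0.2 * x + 0.4 * z
        rows.append((z, x, y))
    return rows

def p_y(rows, x=None):
    """Empirical P(Y=1), optionally restricted to rows with X == x."""
    sel = [r for r in rows if x is None or r[1] == x]
    return sum(r[2] for r in sel) / len(sel)

obs = sample(100_000)
observational = p_y(obs, True) - p_y(obs, False)
interventional = p_y(sample(100_000, do_x=1, seed=1)) - p_y(sample(100_000, do_x=0, seed=2))
print(f"observational difference: {observational:.3f}")   # roughly 0.44, inflated by Z
print(f"interventional effect:    {interventional:.3f}")  # roughly 0.20, the assumed true effect
```

Under these assumed mechanisms the analytic values are 0.82 - 0.38 = 0.44 for the observational difference and 0.7 - 0.5 = 0.2 for the interventional effect; the simulation approximates both.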

Traditional feature learning methods typically learn spurious correlations introduced by confounding factors, which reduces model robustness and makes it difficult to generalize across domains. Causal inference, a learning paradigm that uncovers the actual causal relationships behind observed outcomes, can overcome these inherent flaws of correlation-based learning and learn features that are robust, reusable, and reliable.
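One standard way to remove the spurious correlation a confounder introduces is backdoor adjustment: when a set Z blocks every backdoor path from X to Y, P(Y | do(X=x)) = Σ_z P(Y | X=x, Z=z) P(Z=z). The following sketch applies that formula to a small hypothetical joint distribution over binary variables; the distribution and the helper names (`joint`, `marginal`, `naive`, `backdoor`) are assumptions chosen for illustration, not the paper's method.

```python
from itertools import product

def joint():
    """Hypothetical joint P(Z, X, Y) over binary variables (illustrative assumption):
    Z ~ Bernoulli(0.5), P(X=1|Z) = 0.8 if Z else 0.2, P(Y=1|X,Z) = 0.3 + 0.2X + 0.4Z."""
    p = {}
    for z, x, y in product((0, 1), repeat=3):
        pz = 0.5
        px = (0.8 if z else 0.2) if x else (0.2 if z else 0.8)
        py1 = 0.3 + 0.2 * x + 0.4 * z
        p[(z, x, y)] = pz * px * (py1 if y else 1 - py1)
    return p

def marginal(p, **fixed):
    """Sum the probabilities of all cells matching the fixed values, e.g. marginal(p, z=1)."""
    names = ("z", "x", "y")
    return sum(v for k, v in p.items()
               if all(k[names.index(n)] == val for n, val in fixed.items()))

def naive(p, x):
    """P(Y=1 | X=x): plain conditioning, which mixes in the confounding path X <- Z -> Y."""
    return marginal(p, x=x, y=1) / marginal(p, x=x)

def backdoor(p, x):
    """P(Y=1 | do(X=x)) = sum_z P(Y=1 | X=x, Z=z) * P(Z=z)."""
    return sum(marginal(p, z=z, x=x, y=1) / marginal(p, z=z, x=x) * marginal(p, z=z)
               for z in (0, 1))

p = joint()
print(round(naive(p, 1) - naive(p, 0), 3))        # 0.44
print(round(backdoor(p, 1) - backdoor(p, 0), 3))  # 0.2
```

Plain conditioning reports a difference of 0.44, while the adjusted estimate recovers the assumed causal effect of 0.2; the gap is the confounding bias that a purely correlation-based learner would absorb.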

Section 3 reviews recent representative causal inference methods for general feature learning. These methods fall into three main paradigms: 1) embedding structural causal models (SCMs), 2) applying causal interventions or counterfactuals, and 3) feature selection based on Markov boundaries (MBs).
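The third paradigm rests on the Markov boundary (Markov blanket) of a target variable. In a causal DAG, and under the usual faithfulness assumption, it consists of the target's parents, its children, and its children's other parents; conditioned on this set, the target is independent of every other variable. MB-based feature selection methods typically have to learn this set from data with conditional independence tests. The sketch below sidesteps that by assuming the graph is already known, so it only illustrates the definition; the DAG, the variable names, and the function `markov_blanket` are hypothetical.

```python
def markov_blanket(parents, target):
    """Return the Markov blanket of `target` in a DAG given as {node: set of parents}:
    its parents, its children, and its children's other parents (spouses)."""
    children = {node for node, ps in parents.items() if target in ps}
    spouses = {p for child in children for p in parents[child]} - {target}
    return set(parents.get(target, set())) | children | spouses


# Hypothetical causal graph around an image label Y (illustrative only):
#   shape -> Y -> texture <- lighting <- background;  color is unrelated
parents = {
    "shape": set(),
    "Y": {"shape"},
    "texture": {"Y", "lighting"},
    "lighting": {"background"},
    "background": set(),
    "color": set(),
}

print(sorted(markov_blanket(parents, "Y")))  # ['lighting', 'shape', 'texture']
```

Here `background` is linked to `Y` only through `lighting`, which is already in the blanket, so it is excluded; `color` is disconnected and excluded as well.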

Visual representation learning has made great progress in recent years, using spatial or temporal information to accomplish tasks such as visual understanding (object detection, scene graph generation, visual grounding, and visual commonsense reasoning), action detection and recognition, and visual question answering.

In Section 4, the researchers introduce these representative visual learning tasks and discuss the existing challenges and the need to apply causal inference to visual representation learning.

Given the visual representation learning methods discussed above, current machine learning, and representation learning in particular, faces several challenges: 1) a lack of interpretability, 2) a lack of generalization ability, and 3) over-reliance on correlations in the data distribution. Causal inference provides a promising alternative for addressing these challenges.

Causal discovery helps reveal the causal mechanisms behind the data, allowing machines to better understand the "why" behind what they observe and to make decisions through intervention and counterfactual reasoning.
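Counterfactual reasoning is commonly described as a three-step procedure on an SCM: abduction (infer the exogenous noise from what was observed), action (apply the intervention), and prediction (recompute the outcome). The sketch below runs these steps on a hypothetical linear SCM, Y := 2X + U_y; the model, its coefficient, and the function name `counterfactual_y` are assumptions for illustration only.

```python
# Hypothetical linear SCM (an illustrative assumption):
#   X := U_x
#   Y := coef * X + U_y
def counterfactual_y(x_obs, y_obs, x_new, coef=2.0):
    """Answer 'what would Y have been had X been x_new?' for one observed unit."""
    # 1) Abduction: recover the unit's noise term from the observation.
    u_y = y_obs - coef * x_obs
    # 2) Action: apply do(X = x_new), keeping the inferred U_y fixed.
    # 3) Prediction: recompute Y under the modified model.
    return coef * x_new + u_y

print(counterfactual_y(x_obs=1.0, y_obs=3.0, x_new=0.0))  # 1.0
```

Having observed X = 1 and Y = 3, the inferred noise is U_y = 1, so in this assumed model Y would have been 1.0 had X been 0.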

In Section 5, the researchers summarize some recent approaches to causal visual representation learning, an emerging research topic that has taken shape since 2020. The tasks involved can be broadly grouped into three major areas: 1) causal visual understanding, 2) causal visual robustness, and 3) causal visual question answering. In this section, the researchers describe these three representative causal visual representation learning tasks.

Correlation-based models may perform well on existing datasets not because they have strong reasoning capabilities, but because these datasets cannot fully evaluate a model's reasoning ability. Models can exploit spurious correlations within these datasets to "cheat," meaning they focus on learning superficial correlations and merely approximate the dataset distribution rather than performing genuine causal inference.

For example, in the VQA v1.0 dataset for the VQA task, a model that simply answers "yes" to questions of the form "Do you see…?" achieves an accuracy of almost 90% on them. This shortcoming of current datasets calls for benchmarks that can assess a model's true causal reasoning ability.
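A simple way to see how such shortcuts arise is a "blind" baseline that never looks at the image and answers each question with the most frequent training answer for its opening words. The sketch below implements that idea on a handful of made-up question/answer pairs; the data, the three-word prefix rule, and the function names (`prefix`, `fit_prior`, `accuracy`) are hypothetical, and the printed accuracy says nothing about VQA v1.0 itself.

```python
from collections import Counter, defaultdict

def prefix(question, n=3):
    """Question type approximated by its first n lowercase words (an assumption)."""
    return " ".join(question.lower().split()[:n])

def fit_prior(train):
    """Map each question prefix to its most frequent training answer; no image is used."""
    by_prefix = defaultdict(Counter)
    for q, a in train:
        by_prefix[prefix(q)][a] += 1
    return {k: c.most_common(1)[0][0] for k, c in by_prefix.items()}

def accuracy(prior, data, fallback="yes"):
    """Fraction of questions answered correctly by the question-only prior."""
    hits = sum(prior.get(prefix(q), fallback) == a for q, a in data)
    return hits / len(data)

# Made-up toy data for illustration only.
train = [("Do you see a dog?", "yes"), ("Do you see a car?", "yes"),
         ("Do you see a cat?", "no"), ("What color is the bus?", "red"),
         ("What color is the sky?", "blue"), ("What color is the car?", "red")]
test = [("Do you see a tree?", "yes"), ("Do you see a boat?", "yes"),
        ("Do you see a bird?", "no"), ("What color is the door?", "red")]

prior = fit_prior(train)
print(accuracy(prior, test))  # 0.75, without looking at any image
```

A benchmark meant to assess causal reasoning should make such question-only priors uninformative, for example by balancing answers within each question type.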

In Section 6, the researchers use image question answering and video question answering benchmarks as examples to analyze the current state of research on causal reasoning datasets and to indicate future directions.

Section 7 suggests and discusses some future research directions. Causal visual representation learning has a wide range of applications, and modeling causal inference for different tasks can help machines better perceive the real world. In this section, the researchers present applications in five areas: image/video analysis, explainable artificial intelligence, recommendation systems, human-computer dialogue and interaction, and crowd intelligence analysis.

The section also discusses how causal inference can benefit a variety of real-world applications.

Some researchers have successfully applied causal inference in visual representation learning to discover causal and visual relationships. However, causal visual representation learning is still in its early stages, and many challenges remain unresolved. In Section 8, therefore, the researchers highlight some possible research directions and open questions to encourage more extensive and in-depth research on this topic.

Potential research directions for causal visual representation learning can be summarized as follows.

- More rational causal modeling

- More accurate approximation of intervention distribution

- A better counterfactual synthesis process

- Large-scale benchmarking and evaluation pipeline

This paper provides a comprehensive survey of causal inference in visual representation learning. The researchers hope that it will draw attention, foster discussion, and bring to the forefront the urgency of developing new causal inference techniques for learning reliable visual representations more efficiently, along with related real-world applications, possible benchmarks, and consensus standards.

For more information:

Yang Liu et al., Causal Reasoning Meets Visual Representation Learning: A Prospective Study, Machine Intelligence Research (2022). DOI: 10.1007/s11633-022-1362-z

Provided by: Beijing Zhongke Journal Publishing Co., Ltd.