The tool, called Nightshade, messes with training data in ways that could cause serious damage to image-generating AI models.

A new tool lets artists add invisible changes to the pixels in their art before they upload it online, so that if the art is scraped into an AI training set, it can cause the resulting model to break in chaotic and unpredictable ways.

The tool, called Nightshade, is intended as a way to fight back against AI companies that use artists’ work to train their models without the creator’s permission. Using it to “poison” this training data could damage future iterations of image-generating AI models, such as DALL-E, Midjourney, and Stable Diffusion, by rendering some of their outputs useless: dogs become cats, cars become cows, and so forth. MIT Technology Review got an exclusive preview of the research, which has been submitted for peer review at the computer security conference Usenix.

AI companies such as OpenAI, Meta, Google, and Stability AI are facing a slew of lawsuits from artists who claim that their copyrighted material and personal information was scraped without consent or compensation. Ben Zhao, a professor at the University of Chicago who led the team that created Nightshade, says the hope is that it will help tip the balance of power back from AI companies toward artists by creating a powerful deterrent against disrespecting artists’ copyright and intellectual property. Meta, Google, Stability AI, and OpenAI did not respond to MIT Technology Review’s request for comment on how they might respond.


Zhao’s team also developed Glaze, a tool that allows artists to “mask” their personal style to prevent it from being scraped by AI companies. It works in a similar way to Nightshade: it changes the pixels of an image in subtle ways that are invisible to the human eye but manipulate machine-learning models into interpreting the image as something different from what it actually shows.

The team intends to integrate Nightshade into Glaze, and artists can choose whether or not to use the data-poisoning tool. The team is also making Nightshade open source, so others can tinker with it and make their own versions. The more people use it and make their own versions, the more powerful the tool becomes, Zhao says. The data sets for large AI models can consist of billions of images, so the more poisoned images that are scraped into a model, the more damage the technique will cause.

A targeted attack

Nightshade exploits a security vulnerability in generative AI models, one that arises from the fact that these models are trained on vast amounts of data, in this case images hoovered up from the internet. Nightshade messes with those images.

Artists who want to upload their work online but don’t want their images scraped by AI companies can upload them to Glaze and choose to mask them with an art style different from their own. They can then also opt to use Nightshade. When AI developers scrape the internet for more data to tweak an existing AI model or build a new one, these poisoned samples make their way into the model’s data set and cause it to malfunction.

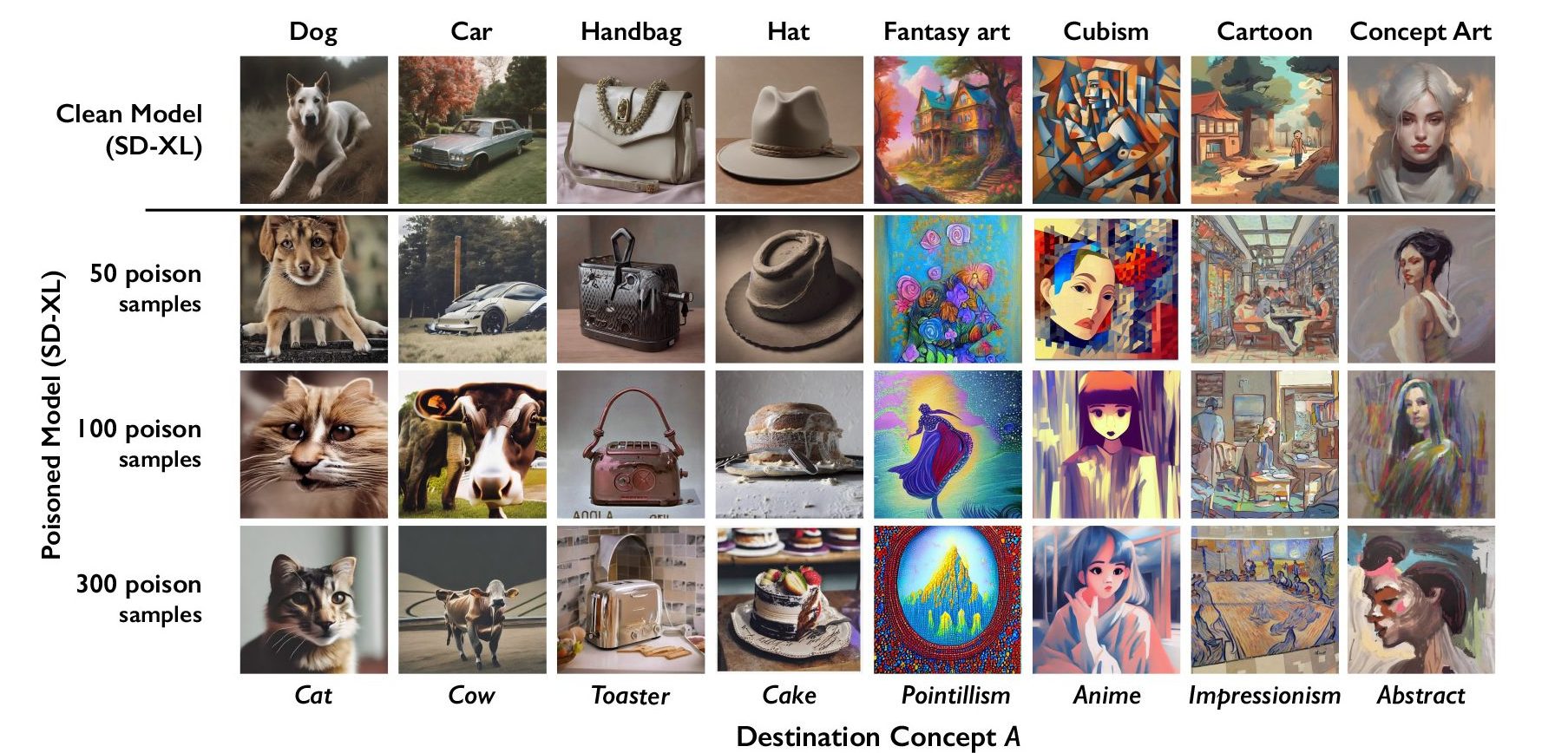

Poisoned data samples can manipulate a model into learning, for example, that images of hats are cakes and images of handbags are toasters. The poisoned data is very difficult to remove, because tech companies must painstakingly find and delete each corrupted sample.

The researchers tested the attack on Stable Diffusion’s latest models and on an AI model they trained from scratch. When they fed Stable Diffusion just 50 poisoned images of dogs and then prompted it to create images of dogs itself, the output started to look weird: creatures with too many limbs and cartoonish faces. With 300 poisoned samples, an attacker can manipulate Stable Diffusion into generating images of dogs that look like cats.

Courtesy of the researchers

Generative AI models are excellent at making connections between words, which helps the poison spread. Nightshade infects not only the word “dog” but all similar concepts, such as “puppy,” “husky,” and “wolf.” The poison attack also works on tangentially related images. For example, if a model scrapes a poisoned image for the prompt “fantasy art,” the prompts “dragon” and “a castle in The Lord of the Rings” are similarly manipulated into something else.

Courtesy of the researchers

Zhao admits there is a risk that people might abuse the data-poisoning technique for malicious purposes. However, he says, attackers would need thousands of poisoned samples to inflict real damage on larger, more powerful models, because those models are trained on billions of data samples.

“We don’t yet know of robust defenses against these attacks. We haven’t yet seen poisoning attacks on modern [machine learning] models in the wild, but it could be just a matter of time,” says Vitaly Shmatikov, a professor at Cornell University who studies AI model security and was not involved in the research. “The time to work on defenses is now,” Shmatikov adds.

Gautam Kamath, an assistant professor at the University of Waterloo who researches data privacy and robustness in AI models and was not involved in the study, says the work is “excellent.”

The research shows that these vulnerabilities “don’t magically go away for these new models, and in fact only become more serious,” Kamath says. “This is especially true as these models become more powerful and people place more trust in them, since the stakes only rise over time.”

A powerful deterrent

Junfeng Yang, a computer science professor at Columbia University who has studied the security of deep-learning systems and was not involved in the work, says Nightshade could have a big impact if it makes AI companies respect artists’ rights more, for example by making them more willing to pay out royalties.

AI companies that have developed generative text-to-image models, such as Stability AI and OpenAI, have offered to let artists opt out of having their images used to train future versions of the models. But artists say this is not enough. Eva Toorenent, an illustrator and artist who has used Glaze, says opt-out policies require artists to jump through hoops and still leave tech companies with all the power.

Toorenent hopes Nightshade will change the status quo.

“It is going to make [AI companies] think twice, because they have the possibility of destroying their entire model by taking our work without our consent,” she says.

Another artist, Autumn Beverly, says tools like Nightshade and Glaze have given her the confidence to post her work online again. She had previously removed her work from the internet after discovering that it had been scraped without her consent into the popular LAION image database.

“I’m really grateful that there are tools that help artists take back power over their own work,” she says.