Just as businesses adapt their software to run on a variety of desktop, mobile, and cloud operating systems, they must also configure their software for the rapidly advancing AI revolution, in which large language models (LLMs) are powering new AI applications that can interpret and generate human-language text.

While companies can already create an “LLM instance” of their software based on their current API documentation, the problem is that they need to ensure the broader LLM ecosystem can use it properly, and they need full visibility into how well this instance of their product actually works in practice.

And that, in effect, is what Tidalflow is setting out to solve with an end-to-end platform that allows developers to better integrate their existing software with the LLM ecosystem. The fledgling startup emerged from stealth today, announcing a $1.7 million funding round co-led by Google’s Gradient Ventures and Dig Ventures, a VC firm founded by MuleSoft founder Ross Mason, with participation from Antler.

Confidence

Consider the following hypothetical scenario. An online travel platform decides to embrace LLM-enabled chatbots such as ChatGPT and Google’s Bard, allowing its customers to request airfares and book tickets through natural language prompts. So the company creates an LLM instance for each, but for all it knows, 2% of ChatGPT’s results serve up a destination that the customer did not request, and the error rate on Bard may be even higher. Such errors can be expensive, and it is impossible to know for sure.

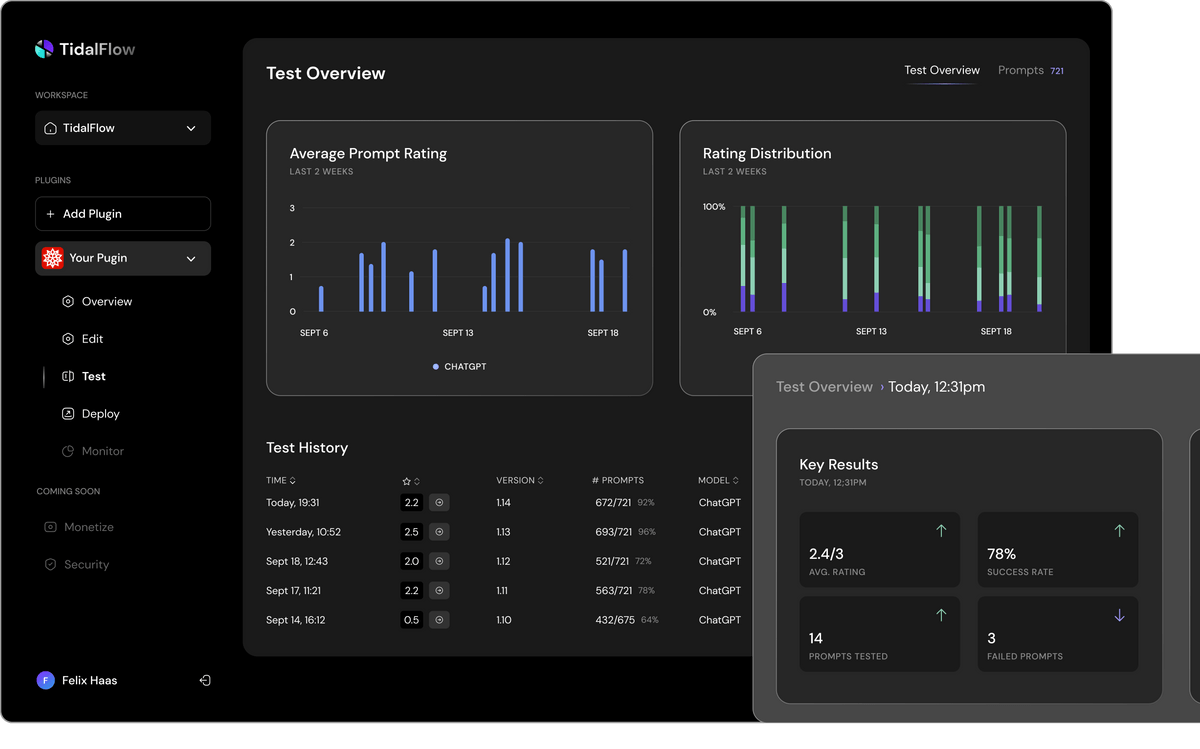

Now, if a company has a fail tolerance of less than 1%, it might feel safer not going down the generative AI route until it has more clarity on the actual performance of its LLM instance. This is where Tidalflow comes in, offering modules that help a company not only create its LLM instance, but also test, deploy, monitor, secure, and ultimately monetize it. Companies can also fine-tune the LLM instance of their product for each ecosystem in a local, simulated sandbox environment until they arrive at a solution that meets their fail-tolerance thresholds.

“The big problem is that when you launch on something like ChatGPT, you don’t actually know how users are interacting with it,” Tidalflow CEO Sebastian Jorna told TechCrunch. “This lack of confidence in software reliability is a major barrier to deploying software tools into the LLM ecosystem. Tidalflow’s testing and simulation module builds that confidence.”

Tidalflow is probably best described as an application lifecycle management (ALM) platform into which companies plug their OpenAPI specification or documentation. Out the other end, Tidalflow spits out a “battle-tested LLM instance” of their product, with a front end for monitoring and observing how that LLM instance actually performs.

“In typical software testing, you run a certain number of cases, and if it works, the software works,” Jorna says. “We’re in this stochastic environment, so you really have to put in a lot of volume to get statistical significance. And that’s basically what we do in our testing and simulation module. What we’re doing is simulating the product as if it were already in operation and simulating how potential users would use it.”
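Jorna's point about needing volume can be made concrete with a little statistics. The following is a minimal sketch, not Tidalflow's actual method: it assumes each simulated interaction is an independent pass/fail trial and uses a Wilson score interval (a standard choice for binomial proportions) to put an upper bound on the true failure rate. The function names and the 1% tolerance are illustrative assumptions.

```python
import math


def wilson_upper_bound(failures: int, trials: int, z: float = 1.96) -> float:
    """Upper end of the Wilson score interval for a failure rate.

    z = 1.96 corresponds to a two-sided 95% confidence level.
    """
    if trials <= 0:
        raise ValueError("trials must be positive")
    p_hat = failures / trials
    denom = 1 + z**2 / trials
    centre = p_hat + z**2 / (2 * trials)
    margin = z * math.sqrt(p_hat * (1 - p_hat) / trials + z**2 / (4 * trials**2))
    return (centre + margin) / denom


def meets_fail_tolerance(failures: int, trials: int, tolerance: float) -> bool:
    """True only if the plausible worst-case failure rate is below the tolerance."""
    return wilson_upper_bound(failures, trials) < tolerance


# Hypothetical simulation results: (failures, simulated runs)
for failures, trials in [(0, 100), (0, 1000), (20, 1000)]:
    bound = wilson_upper_bound(failures, trials)
    verdict = meets_fail_tolerance(failures, trials, tolerance=0.01)
    print(f"{failures}/{trials} failures: upper bound {bound:.4f}, within 1%: {verdict}")
```

Under these assumptions, even a clean run of 100 simulated conversations cannot rule out a failure rate of several percent, which is why a stochastic system needs far more test volume than a deterministic one.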

Tidalflow dashboard. Image credits: Tidalflow

In short, Tidalflow allows businesses to run through countless edge cases that may or may not break their fancy new generative AI smarts. This is especially important for large enterprises, where the risk of compromising software reliability is too great.

“Large corporate clients can’t risk putting something out there unless they’re confident it will work,” Jorna added.

From foundations to funding

Tidalflow’s Coen Stevens (CTO), Sebastian Jorna (CEO), and Henry Wynaendts (CPO). Image credits: Tidalflow

Tidalflow is officially three months old. Its founders, Jorna (CEO) and Coen Stevens (CTO), met through Antler’s entrepreneur-in-residence program in Amsterdam. “Once the official program started in the summer, Tidalflow became the first company in Antler Netherlands’ history to receive funding,” Jorna said.

Currently, Tidalflow claims a team of three people, including the two co-founders and chief product officer (CPO) Henry Wynaendts. But with $1.7 million in new funding, Jorna said the company is now working toward full commercialization and is actively recruiting for a variety of front-end and back-end engineering roles.

But most of all, the rapid turnaround from founding to funding is indicative of the current generative AI gold rush. With ChatGPT getting an API and third-party plugin support, Google making similar efforts for the Bard ecosystem, and Microsoft embedding its Copilot AI assistant across Microsoft 365, businesses and developers have a huge opportunity to not only leverage generative AI in their products, but also reach massive numbers of users in the process.

“Just as the iPhone ushered in a new era of mobile-enabled software in 2007, we are now at a similar tipping point where software becomes LLM compatible,” Jorna said.

Tidalflow remains in closed beta for now, but is expected to be publicly available by the end of 2023.