Aurich Lawson

Fellow members of the Legendary Computer Bugs Tribunal, honored guests, may I have your attention? I would like to respectfully submit a new candidate for your esteemed judgment. You may or may not consider it novel. You may even question whether it is really a "bug." But I'm sure you will find it interesting.

Consider NetHack. It is one of the all-time great roguelikes, and I mean that in the strictest sense of the term: content is procedurally generated, death is permanent, and only skill and knowledge carry over between games. I understand that the only thing two roguelike fans can agree on is how wrong a third fan's definition of roguelike is, but please, let's move on.

NetHack is great for machine learning…

NetHack is a challenging game full of consequential choices and random challenges, but it is also a "single-agent" game that can be generated and played at high speed on modern computers. That makes it great for people working in machine learning, or more precisely imitation learning, as detailed in Jens Tuyls' paper on how compute scaling affects single-agent game learning. Using Tuyls' model of expert NetHack behavior, Bartłomiej Cupiał and Maciej Wołczyk trained a neural network to play and improve itself using reinforcement learning.

By mid-May of this year, their model was consistently scoring 5,000 points by their own metric. Then, on one run, the model suddenly performed about 40 percent worse, scoring 3,000 points. On problems like this, machine learning generally moves in one direction over time. This didn't make sense.

Cupiał and Wołczyk tried several fixes: reverting their code, restoring their entire software stack from a Singularity backup, and reverting their CUDA libraries. The result? 3,000 points. They rebuilt everything from scratch, and it was still 3,000 points.

NetHack, played by an ordinary human.

… except on certain nights

As detailed in Cupiał's X (formerly Twitter) thread, this took several hours of confused trial and error by him and Wołczyk. "I'm beginning to feel like I'm going mad. I can't even watch a TV show without constantly thinking about the bug," Cupiał wrote. In desperation, he asked model author Tuyls if he knew what the problem could be. He woke up in Kraków to an answer:

“Oh yeah, it’s probably a full moon today.”

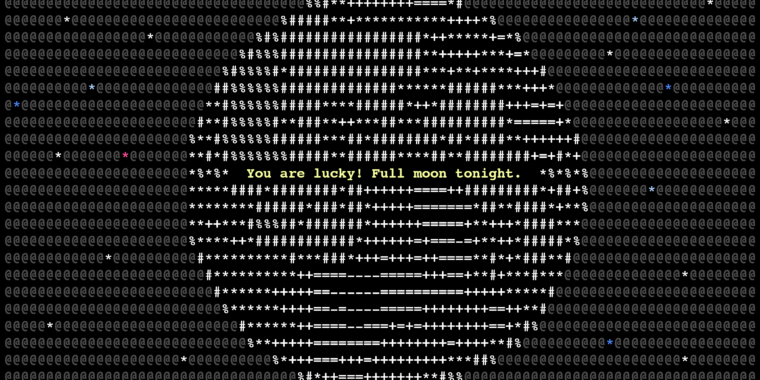

In NetHack, a game whose development team has thought of everything, if the game detects from the system clock that it should be a full moon, it displays the message "You are lucky! Full moon tonight." A full moon grants the player a few perks: one extra point of Luck, and werecreatures mostly stay in their animal form.
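For the curious, here is roughly how the game decides that tonight is a full moon. The sketch below is a Python port, as I understand it, of the `phase_of_the_moon()` routine in NetHack's C source, which maps the day of the year and the year's position in the 19-year Metonic cycle onto eight rough phases (0 is new, 4 is full). The Python function names and the explicit `date` argument are my own illustration; the game itself reads the local system clock, which is exactly why restoring a backup did nothing to change the date.

```python
import datetime

NEW_MOON = 0
FULL_MOON = 4


def phase_of_the_moon(date: datetime.date) -> int:
    """Approximate moon phase, 0-7 (0 = new, 4 = full), ported from NetHack's C routine."""
    # C's tm_yday is 0-based; Python's tm_yday is 1-based.
    diy = date.timetuple().tm_yday - 1
    # C's tm_year counts years since 1900; goldn is the year's "golden number" (1-19).
    goldn = ((date.year - 1900) % 19) + 1
    # Epact: approximate age of the moon at the start of the year.
    epact = (11 * goldn + 18) % 30
    if (epact == 25 and goldn > 11) or epact == 24:
        epact += 1
    # Roughly 6 lunations in 177 days, split into 8 phases of about 22 days each.
    return ((((diy + epact) * 6) + 11) % 177) // 22 & 7


def full_moon_tonight(date: datetime.date) -> bool:
    """True when the game would grant the full-moon Luck bonus (hypothetical helper)."""
    return phase_of_the_moon(date) == FULL_MOON


print(phase_of_the_moon(datetime.date(2023, 5, 5)))  # 4, a full moon
print(full_moon_tonight(datetime.date.today()))
```

Note that nothing in the calculation comes from the game state; a learning agent sees the effects of the full moon without ever seeing the date that caused them.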

All things considered, this makes the game easier, so why would the learning agent score lower? Its training data simply contains nothing about the full-moon variable, so a branching series of decisions could lead to worse outcomes, or just confusion. It was indeed a full moon in Kraków when the scores of around 3,000 started coming in. What a terrible night to be a learning model.

Of course, "score" is not a true measure of success in NetHack, as Cupiał himself points out. Ask a model to get the highest score possible, and it will kill tons of low-level monsters, because it never gets bored. "Finding the items needed for [ascension] or even [just] doing the quest is too taxing for a pure RL agent," Cupiał wrote. Another agent, AutoAscend, does a better job of progressing through the game, "but even it can only solve Sokoban and reach the end of the Mines," Cupiał notes.

Is it a bug?

I submit that this quirky, deeply baffling stop on a machine-learning journey, in which NetHack responded to the full moon exactly as intended, is surely a bug, and one worthy of induction into the hall of fame. It is not a Harvard moth, nor a 500-mile email, but then, what is?

Because the team used Singularity to back up and restore their stack, they inadvertently carried the machine's current date forward, and the bug along with it, every time they tried to solve it. The machine's resulting behavior was so strange, and so seemingly driven by invisible forces, that it drove the programmers to distraction. And the story has a beginning, a climactic middle, and an ending that teaches us something, however obscure.

The NetHack Lunar Learning Bug is, I submit, well worth celebrating. Thank you for your time.